Developing agents that follow instructions in grounded environments raises two main challenges: sample efficiency and generalizability. These agents need to learn effectively from a few demonstrations and still perform well in new environments with new instructions after training. Reinforcement learning and imitation learning are commonly used, but they rely on trial and error or on expert guidance, so they often require large numbers of trials or become expensive because of the expert demonstrations they need.

In language-based instruction following, the agent receives an instruction and partial observations of the environment and acts accordingly. Reinforcement learning trains the agent from rewards, while imitation learning trains it to mimic the actions of an expert. Behavioral cloning, unlike online imitation learning, collects expert data offline and uses it to train policies, which helps with long-horizon tasks in grounded environments. Recent research has shown that pre-trained large language models (LLMs) enable sample-efficient learning through prompting and in-context learning across textual and grounded tasks, including robot control. Nevertheless, existing methods for instruction following in grounded scenarios call the LLM online during inference, which is impractical and costly.

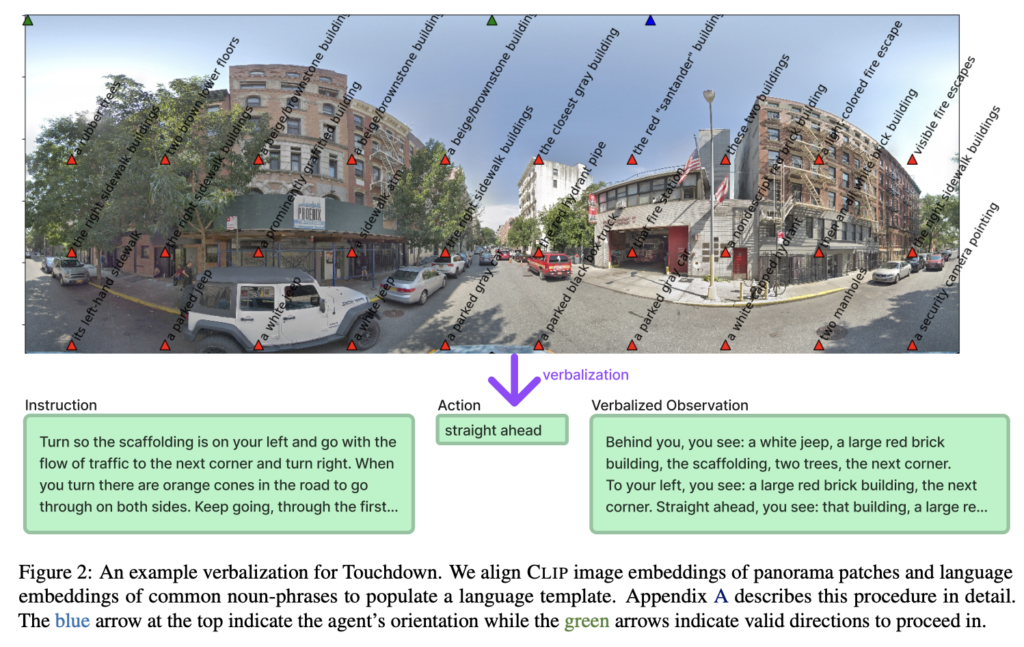

Researchers from Microsoft Research and the University of Waterloo have proposed Language Feedback Models (LFMs) for policy improvement in instruction following. LFMs leverage LLMs to provide feedback on agent behavior in grounded environments, which helps identify desirable actions. By distilling this feedback into a compact LFM, the technique enables sample-efficient and cost-effective policy improvement without continuous reliance on LLMs. LFMs generalize to new environments and provide interpretable feedback that lets humans validate the imitation data.

The proposed method uses LFMs to enhance policy learning for instruction following. LFMs leverage LLMs to identify productive actions in rollouts of a base policy, and these actions then serve as data for batch imitation learning that improves the policy. By distilling world knowledge from LLMs into a compact model, the approach provides sample-efficient and generalizable policy improvement without requiring ongoing online interaction with expensive LLMs during deployment. Instead of querying the LLM at every step, the researchers modify the procedure to collect LLM feedback in batches over long horizons, which yields a cost-effective language feedback model.
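The filtering step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the `Step` type, the function names, and the keyword-based `toy_feedback_model` are all hypothetical stand-ins, and a real LFM would be a fine-tuned language model rather than a string rule. The sketch only shows the data flow, in which a feedback model labels steps from base-policy rollouts and the productive steps become imitation-learning examples.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Step:
    """One step of a base-policy rollout (hypothetical structure)."""
    instruction: str
    observation: str
    action: str


# A feedback model maps a step to (is_productive, explanation).
FeedbackModel = Callable[[Step], Tuple[bool, str]]


def toy_feedback_model(step: Step) -> Tuple[bool, str]:
    """Hypothetical stand-in for a trained LFM: a simple keyword rule.

    Treats the last word of the instruction as the target object and
    marks an action as productive if it mentions that object.
    """
    target = step.instruction.split()[-1]
    productive = target in step.action
    if productive:
        reason = f"'{step.action}' involves the target '{target}'"
    else:
        reason = f"'{step.action}' does not involve the target '{target}'"
    return productive, reason


def build_imitation_dataset(
    rollouts: List[List[Step]], feedback: FeedbackModel
) -> List[Tuple[str, str]]:
    """Keep only the steps judged productive, as (prompt, action) pairs."""
    dataset = []
    for rollout in rollouts:
        for step in rollout:
            productive, _reason = feedback(step)
            if productive:
                prompt = f"{step.instruction} | {step.observation}"
                dataset.append((prompt, step.action))
    return dataset


# Example rollout from an imagined base policy.
rollout = [
    Step("find the mug", "you are in the kitchen", "open fridge"),
    Step("find the mug", "you see a mug on the table", "take mug"),
    Step("find the mug", "you hold the mug", "go to bedroom"),
]
dataset = build_imitation_dataset([rollout], toy_feedback_model)
print(dataset)
```

In this sketch the resulting pairs would then be used to fine-tune the policy with ordinary behavioral cloning, and the explanation string returned by the feedback model is what a human could inspect to validate the selected data.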

In their experiments, the researchers used GPT-4 as the LLM for action prediction and feedback, and fine-tuned a 770M-parameter FLAN-T5 to obtain the policy and feedback models. By drawing on the LLM, LFMs identify productive behaviors and improve policies without ongoing LLM interaction. LFMs perform better than using the LLM directly to predict actions, generalize to new environments, and provide interpretable feedback. Together, these properties offer a cost-effective way to improve policies and foster user trust. Overall, LFMs significantly improve policy performance and demonstrate their effectiveness in grounded instruction following.

In conclusion, researchers from Microsoft Research and the University of Waterloo proposed Language Feedback Models. LFMs excel at identifying desirable behaviors for imitation learning across a variety of benchmarks. They outperform baseline methods and LLM-based expert imitation learning without continuous use of LLMs. LFMs generalize well and yield significantly improved policy adaptation in new environments. Furthermore, they provide detailed feedback that humans can interpret, which promotes trust in the imitation data. Future research may consider using detailed LFM feedback for RL reward modeling and creating trustworthy policies through human validation.

Check out the paper. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a degree in mechanical engineering from the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast and is constantly researching applications of machine learning in healthcare.