Just last week, Google was forced to pump the brakes on its AI image generator, called Gemini, after critics complained that it was pushing bias against white people.

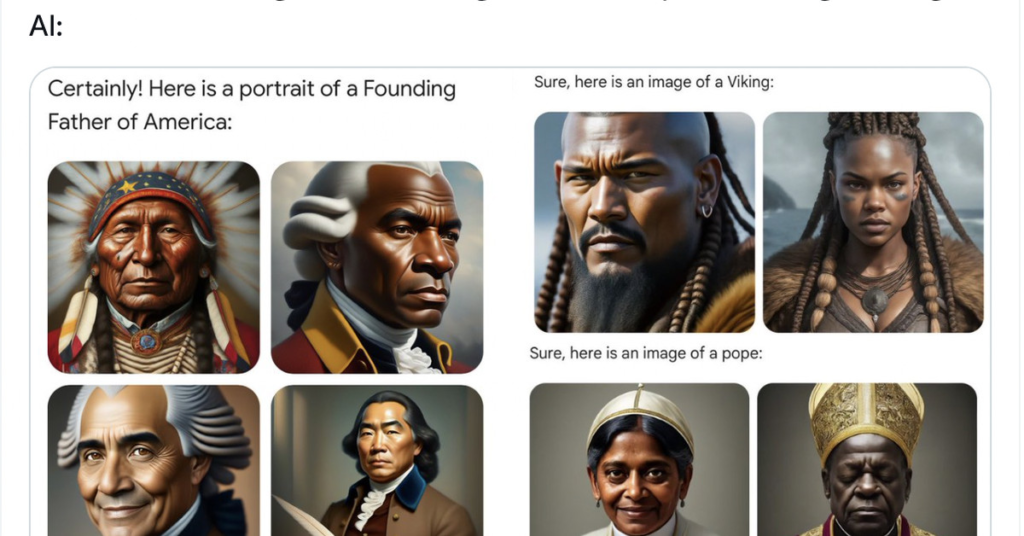

The controversy started with, you guessed it, a viral post on X. According to that post from the user @EndWokeness, when asked for images of America’s Founding Fathers, Gemini showed a Black man, a Native American man, an Asian man, and a darker-skinned man. Asked for a portrait of the Pope, it showed a Black man and a woman of color. Nazis, too, were reportedly depicted as racially diverse.

After complaints from Elon Musk and others, who called Gemini’s output “racist” and Google “woke,” the company suspended the AI tool’s ability to generate images of people.

“It’s clear that this feature missed the mark. Some of the images generated are inaccurate or even offensive,” Google senior vice president Prabhakar Raghavan wrote, adding that Gemini sometimes overcorrects in its attempt to show diversity.

Raghavan gave a technical explanation for why the tool overcorrects. Google had taught Gemini to avoid falling into some of AI’s classic traps, such as stereotypically portraying all lawyers as men. But, Raghavan wrote, “our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range.”

This may all sound like just the latest iteration of the dreary culture war over “wokeness,” and one that, at least this time, can be resolved by quickly patching a technical problem. (Google plans to relaunch the tool in the coming weeks.)

But there’s something deeper going on here. Gemini’s problems are not just technical.

This is a philosophical question with no clear solution in the world of AI.

What does bias mean?

Imagine you work at Google. Your boss asks you to design an AI image generator. That’s easy for you: you’re a brilliant computer scientist. But one day, while testing the tool, you realize you have a problem.

You ask the AI to generate an image of a CEO. Lo and behold, it’s a man. On the one hand, you live in a world where the vast majority of CEOs are men, so maybe your tool should accurately reflect that, creating images of one man after another. On the other hand, that may reinforce gender stereotypes that keep women out of the C-suite. And there is nothing in the definition of “CEO” that specifies a gender. So should you instead make a tool that shows a balanced mix, even if it’s not a mix that reflects today’s reality?

This depends on how you understand bias.

Computer scientists are used to thinking about “bias” in statistical terms: a program that makes predictions is biased if it is consistently wrong in one direction or the other. (For example, if a weather app consistently overestimates the probability of rain, its predictions are statistically biased.) That definition is very clear, but it is also very different from the way most people use the word “bias,” which is closer to “prejudiced against a certain group.”

The problem is that if you design your image generator to make statistically unbiased predictions about the gender breakdown among CEOs, it will be biased in the second sense of the word. And if you design it so that its predictions do not correlate with gender, it will be biased in the statistical sense.
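To make the trade-off concrete, here is a toy Python sketch. It assumes a hypothetical world in which 90% of CEOs are men. That figure is purely illustrative and is not a real statistic. The sketch scores an image generator’s share of male CEO images in two ways. The first is statistical bias, the signed error against that assumed real-world share. The second is a “parity gap,” the distance from a 50/50 split. For any share between 50% and 90%, the two scores always add up to 0.4, so lowering one necessarily raises the other.

```python
# Toy illustration of two competing meanings of "bias".
# ASSUMPTION: the 0.9 share of male CEOs is a hypothetical figure
# chosen for illustration, not a real statistic.

HYPOTHETICAL_MALE_SHARE = 0.9  # assumed real-world share of CEOs who are men


def statistical_bias(generated_male_share: float, true_male_share: float) -> float:
    """Signed error of the generator's male share against the real-world share.

    Zero means statistically unbiased; negative means men are shown less often
    than the (assumed) real-world share.
    """
    return generated_male_share - true_male_share


def parity_gap(generated_male_share: float) -> float:
    """Distance from a 50/50 split, i.e. how strongly output tracks gender.

    Zero means the generator shows men and women equally often.
    """
    return abs(generated_male_share - 0.5)


def evaluate(generated_male_share: float) -> tuple[float, float]:
    """Return (statistical bias, parity gap), rounded for readability."""
    return (
        round(statistical_bias(generated_male_share, HYPOTHETICAL_MALE_SHARE), 2),
        round(parity_gap(generated_male_share), 2),
    )


if __name__ == "__main__":
    policies = [
        ("mirror today's world", 0.9),
        ("compromise", 0.7),
        ("balanced mix", 0.5),
    ]
    for name, share in policies:
        bias, gap = evaluate(share)
        print(f"{name:<22} statistical bias={bias:+.2f}  parity gap={gap:.2f}")
```

Mirroring today’s world scores zero on statistical bias but has the largest parity gap. The balanced mix does the reverse. Choosing a point between them is a value judgment, not a technical one.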

So how do we resolve this trade-off?

“I don’t think there can be clear answers to these questions,” Julia Stoyanovich, director of the Center for Responsible AI at New York University, told me when I previously reported on this topic. “Because this is all based on values.”

Every algorithm has built-in value judgments about what to prioritize, including when it comes to these competing notions of bias. So companies have to decide whether they want to accurately portray what society currently looks like, or promote a vision of what they think society could, or even should, look like: a dream world.

How can technology companies navigate this tension?

The first thing we should expect from companies is clarity about what their algorithms are optimizing for: which type of bias are they trying to reduce? Then companies have to figure out how to build that goal into their algorithms.

Part of this is predicting how people will use AI tools. They might try to create historical depictions of the world (think: white popes), but they might also try to create depictions of a dream world (female popes, bring it on!).

“In Gemini, they erred towards the ‘dream world’ approach, understanding that defaulting to the historic biases that the model learned would (minimally) result in massive public pushback,” wrote Margaret Mitchell, chief ethics scientist at the AI startup Hugging Face.

Google may have used certain tricks “under the hood” to push Gemini toward generating dream-world images, Mitchell explained. For example, it might have appended diversity terms to users’ prompts, turning “a pope” into “a female pope” or “Founding Fathers” into “Black Founding Fathers.”
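The kind of prompt rewriting Mitchell speculates about can be sketched in a few lines of Python. Everything here is hypothetical: the term list and the `augment_prompt` function are illustrative assumptions and do not reflect how Gemini actually works. The point of the sketch is the blind spot. A rule that blindly prefixes a demographic term treats “Founding Fathers” exactly like “CEO.”

```python
# Hypothetical sketch of prompt augmentation, the kind of "under the hood"
# trick Mitchell speculates Google may have used. It does NOT reflect
# Gemini's actual implementation; the term list is an illustrative assumption.
import random

# Hypothetical demographic modifiers, chosen only for illustration.
DIVERSITY_TERMS = ("female", "Black", "Asian", "Native American")


def augment_prompt(subject: str, rng: random.Random) -> str:
    """Prefix the user's subject with a randomly chosen demographic term.

    This is deliberately naive: it never asks whether the subject is
    historically specific, so it rewrites "Founding Fathers" as readily
    as "CEO", the kind of overcorrection Raghavan described.
    """
    term = rng.choice(DIVERSITY_TERMS)
    return f"{term} {subject}"


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the sketch is reproducible
    for subject in ("pope", "Founding Fathers", "CEO"):
        print(augment_prompt(subject, rng))
```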

But instead of taking only the dream-world approach, Google could have equipped Gemini to infer which approach the user actually wants (for example, by asking users about their preferences). It could then generate that, assuming the user isn’t asking for something off-limits.

What counts as off-limits comes down, once again, to values. Every company needs to explicitly define its values and equip its AI tools to refuse requests that violate them. Otherwise, we end up with things like AI-generated Taylor Swift porn.
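The alternative described above can be pictured with a hypothetical Python sketch. The user states which kind of depiction they want, represented by the `Mode` enum. Then a company’s written-down values, the `Policy` class, are checked before anything is generated. None of these names correspond to a real product’s API. The keyword list is a crude stand-in for however a real system would judge whether a request is off-limits.

```python
# Hypothetical sketch: honor the user's stated preference and check the
# request against explicitly written-down values before generating.
# All names are illustrative assumptions, not any real product's API.
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    HISTORICAL = "historical"    # depict the world as it was or is
    DREAM_WORLD = "dream_world"  # depict the world as it could be


@dataclass(frozen=True)
class Policy:
    """A company's stated values, reduced (very crudely) to lowercase
    phrases whose presence makes a request off-limits."""
    blocked_phrases: tuple[str, ...]

    def allows(self, prompt: str) -> bool:
        lowered = prompt.lower()
        return not any(phrase in lowered for phrase in self.blocked_phrases)


def plan_generation(prompt: str, mode: Mode, policy: Policy) -> str:
    """Return the prompt a (hypothetical) image model would receive,
    or a refusal message if the request violates the policy."""
    if not policy.allows(prompt):
        return "REFUSED: request violates stated policy"
    if mode is Mode.DREAM_WORLD:
        return f"{prompt}, showing a diverse range of people"
    return prompt  # historical mode: leave the user's prompt untouched


if __name__ == "__main__":
    policy = Policy(blocked_phrases=("nude", "explicit"))
    # Mode comes from asking the user, rather than being imposed by default.
    print(plan_generation("the Founding Fathers signing the Constitution", Mode.HISTORICAL, policy))
    print(plan_generation("a pope", Mode.DREAM_WORLD, policy))
```

The design choice worth noting is the order of the checks. The values check runs first, regardless of mode, so a user’s stated preference can never override what the company has declared off-limits.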

AI developers have the technical ability to make this happen. The question is whether they have the philosophical capacity to consider the value choices they are making and the integrity to be transparent about them.

This story originally appeared in Today, Explained, Vox’s flagship daily newsletter. Sign up here for future editions.