Generative AI is not built to honestly reflect reality, no matter what its creators say.

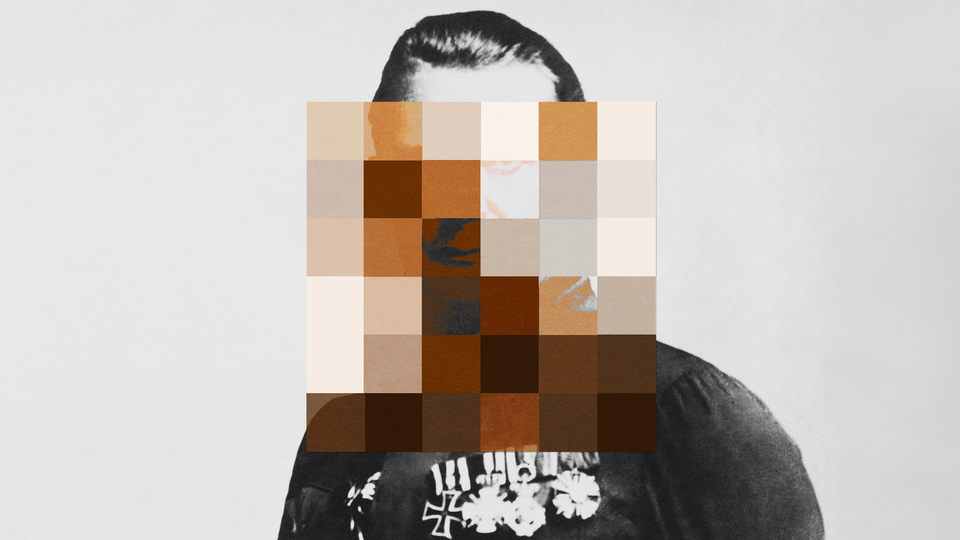

Is there a right way for Google’s generative AI to create fake images of Nazis? According to the company, apparently there is. Gemini, Google’s answer to ChatGPT, was shown last week to generate an absurd range of racially and gender-diverse German soldiers in Wehrmacht garb. Unsurprisingly, it was ridiculed for not creating any images of Nazis who were actually white. Prodded further, it seemed to actively resist producing images of white people altogether. The company ultimately apologized for “inaccuracies in some historical image generation depictions” and suspended Gemini’s ability to generate images featuring people.

The situation was played for laughs on the cover of the New York Post and elsewhere, and Google, which did not respond to a request for comment, said it was trying to resolve the issue. Google senior vice president Prabhakar Raghavan explained in a blog post that the company intentionally designed its software to generate a more diverse representation of people, but that this backfired. He added: “While we cannot promise that Gemini will not occasionally produce embarrassing, inaccurate, or offensive results, we can promise that we will continue to take action whenever a problem is identified.”

Google and other generative AI creators are in a bind. Generative AI is hyped not because it produces truthful or historically accurate representations; it is hyped because it lets ordinary people instantly generate fantastical images that match a given prompt. Bad actors will always be able to exploit these systems. (See: AI-generated images of SpongeBob flying a plane toward the World Trade Center.) Google may try to inject Gemini with what I call “holistic inclusion,” a technological sheen of diversity, but neither the bot nor the data it is trained on will ever comprehensively reflect reality. Instead, it translates a set of priorities established by product developers into code that engages users, and it does not view them all equally.

This is an old problem, diagnosed by Safiya Noble in her book Algorithms of Oppression. Noble was one of the first to comprehensively explain how modern programs, such as those that target online advertising, can “disenfranchise, marginalize, and misrepresent” people on a large scale. Google products are often implicated. In what is now a classic example of algorithmic bias, in 2015 a black software developer named Jacky Alciné pointed out that Google Photos’ image recognition service had labeled him and his friends as “gorillas.” He posted a screenshot showing this on Twitter. The fundamental problem of how this technology could perpetuate racist tropes and prejudices was never resolved, but instead simply papered over. Last year, long after that initial incident, a New York Times investigation found that Google Photos still doesn’t allow users to “visually search for primates for fear of making an unpleasant mistake and labeling people as animals.” This still seems to be the case.

“Racially diverse Nazis” and the racist mislabeling of black men as gorillas are two sides of the same coin. In each example, a product is rolled out to a huge user base, only for that user base, rather than Google’s staff, to discover that the product contains racist flaws. These glitches are the legacy of technology companies determined to offer solutions to problems people didn’t know existed: the inability to visually represent anything you can imagine, or to search for a particular concept among thousands of digital photos.

Inclusion built into these systems is a mirage. It does not inherently mean more fairness, accuracy, or justice. In the case of generative AI, erroneous or racist outputs are usually attributed to inadequate training data, specifically a lack of diverse datasets, which results in the system reproducing stereotypical or discriminatory content. Meanwhile, those who criticize AI for being too “woke” and want these systems to be able to spew out racist, anti-Semitic, and transphobic content, along with people who don’t trust tech companies to make good decisions about what to allow, complain that any restrictions on these technologies effectively “lobotomize” them. This notion furthers the anthropomorphism of technology in a way that gives far too much credit to what is happening inside. These systems have no “mind,” no self, not even a sense of right and wrong. Imposing safety protocols on AI “lobotomizes” it only in the same way that imposing emissions standards or seat belts on a car inhibits the car’s human capabilities.

All of this raises the question of what the best use case for something like Gemini is in the first place. Are we really lacking in historically accurate depictions of the Nazis? These generative AI products are increasingly positioned as gatekeepers to knowledge, though they are not there yet. We may soon see a world where services like Gemini both restrict access to information and contaminate it. The definition of AI is broad; in many ways, it can be understood as a mechanism of extraction and surveillance.

We should expect Google, and all generative AI companies, to do better. But fixing an image generator that creates bizarrely diverse Nazis would rely on temporary solutions to a much deeper problem: algorithms inevitably perpetuate one kind of bias or another. When we turn to these systems for accurate representation, we are ultimately asking for a comforting illusion, an excuse to ignore the machinery that shatters reality into tiny pieces and rearranges them into bizarre shapes.