The growth of artificial intelligence (AI), driven largely by Transformers, spans everything from conversational AI applications to image and video generation. However, traditional symbolic planners still hold an advantage in complex decision-making and planning tasks because of their structured, rule-based approach.

The core issue is the inherent limitation of current Transformer models in solving complex planning and reasoning tasks. Although traditional methods lack the nuanced understanding of natural language that Transformers provide, they are better at planning tasks because they rely on systematic search strategies that often guarantee an optimal solution.

While existing work leverages synthetic datasets to learn powerful policies for reasoning, this work focuses on improving the reasoning capabilities embedded in the Transformer's weights. Algorithms such as AlphaZero, MuZero, and AlphaGeometry treat neural network models as black boxes and use symbolic planning techniques to improve the network. Prompting techniques such as chain-of-thought and tree-of-thoughts have shown promise, but they also have limitations, such as inconsistent performance across different task types and datasets.

Meta’s research team introduced Searchformer, a new Transformer model that significantly improves planning efficiency for complex tasks like Sokoban puzzles. Unlike traditional approaches, Searchformer combines the strengths of Transformers with the structured search dynamics of symbolic planners for a more efficient planning process.

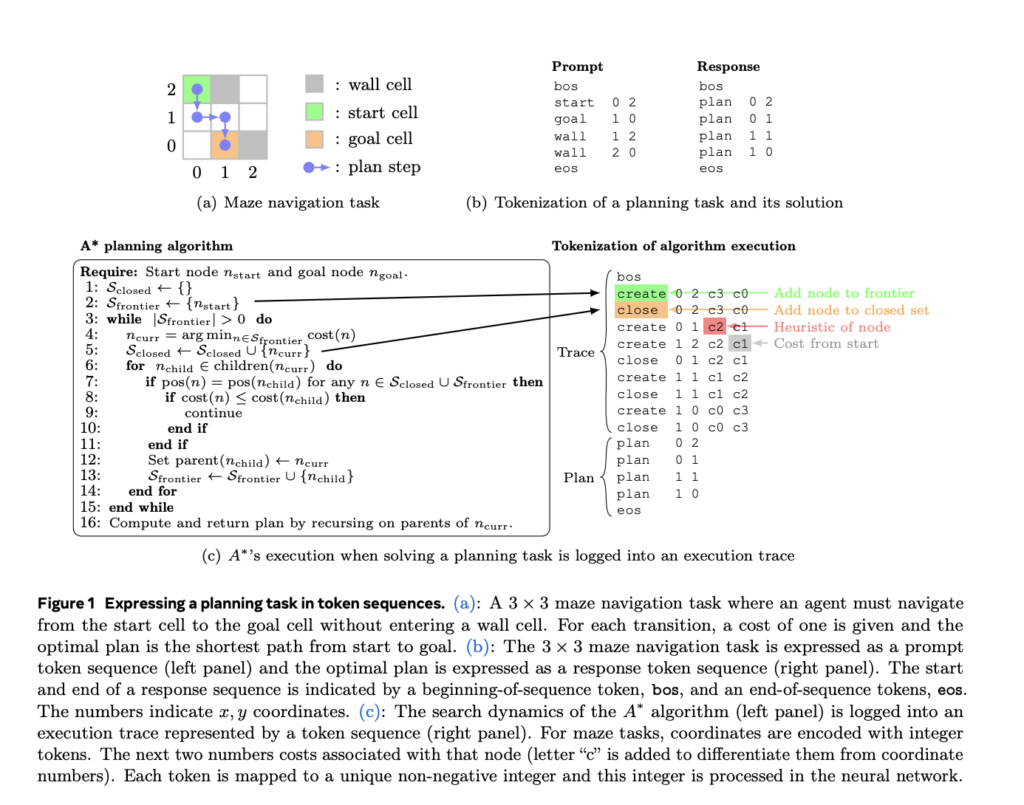

Searchformer can solve complex planning tasks more efficiently than traditional planning algorithms such as A* search. The researchers first generated two kinds of token sequences from randomly generated planning task instances: search-augmented sequences, which encode A*'s search dynamics followed by the final plan, and solution-only sequences, which contain just the plan. By training a Transformer to predict these sequences, they aimed to have the model capture the computation that A* performs.

Searchformer is then trained in two stages. First, it learns to imitate A*'s search procedure on this synthetic dataset. Second, it is refined with expert iteration: the model is fine-tuned on progressively shorter sequences that still lead to optimal solutions, so that it generates fewer search steps while still finding the best plan. This significantly improves efficiency by reducing the number of search steps needed to solve each problem.
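To make the two kinds of training sequences concrete, here is a minimal Python sketch on a small grid maze. It runs A* with a Manhattan-distance heuristic, logs every node it creates and closes as tokens, and then builds a search-augmented sequence (trace followed by plan) and a solution-only sequence (plan only). The token vocabulary (`start`, `wall`, `create`, `close`, `plan`, `c<cost>`) and the helpers `astar_with_trace`, `to_sequences`, and `shortest_optimal` are hypothetical and only loosely modeled on the setup described above. `shortest_optimal` illustrates the expert-iteration idea of keeping, among several sampled outputs, the shortest trace whose plan is still optimal; it assumes each plan has already been checked as a valid path.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def manhattan(a: Cell, b: Cell) -> int:
    """Consistent heuristic for a 4-connected grid with unit step cost."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar_with_trace(walls: Set[Cell], width: int, height: int,
                     start: Cell, goal: Cell) -> Tuple[List[str], Optional[List[Cell]]]:
    """Run A* and record every node creation and expansion as tokens."""
    trace: List[str] = []

    def log(op: str, cell: Cell, g: int) -> None:
        # Hypothetical token format: operation, x, y, cost so far, heuristic value.
        trace.extend([op, str(cell[0]), str(cell[1]), f"c{g}", f"c{manhattan(cell, goal)}"])

    best_g: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    closed: Set[Cell] = set()
    frontier = [(manhattan(start, goal), 0, start)]  # entries are (f = g + h, g, cell)
    log("create", start, 0)
    while frontier:
        _, g, node = heapq.heappop(frontier)
        if node in closed:
            continue  # stale duplicate entry left in the priority queue
        closed.add(node)
        log("close", node, g)
        if node == goal:
            path: List[Cell] = []
            cur: Optional[Cell] = node
            while cur is not None:  # walk parent pointers back to the start
                path.append(cur)
                cur = parent[cur]
            return trace, path[::-1]
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not inside or nxt in walls or nxt in closed:
                continue
            if g + 1 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g + 1
                parent[nxt] = node
                heapq.heappush(frontier, (g + 1 + manhattan(nxt, goal), g + 1, nxt))
                log("create", nxt, g + 1)
    return trace, None  # goal unreachable


def to_sequences(walls: Set[Cell], start: Cell, goal: Cell,
                 trace: List[str], path: List[Cell]) -> Tuple[List[str], List[str]]:
    """Build a search-augmented and a solution-only token sequence for one task."""
    prompt = ["start", str(start[0]), str(start[1]), "goal", str(goal[0]), str(goal[1])]
    for wx, wy in sorted(walls):
        prompt += ["wall", str(wx), str(wy)]
    plan: List[str] = []
    for px, py in path:
        plan += ["plan", str(px), str(py)]
    return prompt + ["trace"] + trace + plan, prompt + plan


def shortest_optimal(candidates: List[Tuple[List[str], Optional[List[Cell]]]],
                     optimal_cost: int) -> Optional[Tuple[List[str], List[Cell]]]:
    """Keep the candidate with the shortest trace whose plan has the optimal cost.
    Plan cost is the number of unit steps, i.e. len(plan) - 1."""
    best: Optional[Tuple[List[str], List[Cell]]] = None
    for trace, plan in candidates:
        if plan is None or len(plan) - 1 != optimal_cost:
            continue
        if best is None or len(trace) < len(best[0]):
            best = (trace, plan)
    return best


walls = {(1, 0), (1, 1)}
trace, path = astar_with_trace(walls, 3, 3, (0, 0), (2, 0))
augmented, solution_only = to_sequences(walls, (0, 0), (2, 0), trace, path)
print(path)  # → [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

The search-augmented sequence is always longer than the solution-only one for the same task, which is why reducing the trace length through expert iteration matters for efficiency.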

The performance evaluation considered several metrics: the percentage of tasks solved, the percentage of optimal solutions, success weighted by cost (SWC), and the improved length ratio (ILR). The search-augmented models and Searchformer perform better on these metrics than the solution-only models. Searchformer optimally solves previously unseen Sokoban puzzles 93.7% of the time, using up to 26.8% fewer search steps than standard A* search. On maze navigation tasks, it also outperforms the baseline with a model 5 to 10 times smaller and a training dataset 10 times smaller.
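The four metrics can be computed from per-task results roughly as follows. This is a minimal sketch: the SWC and ILR formulas reflect our reading of the paper (SWC gives 1 for an optimal plan, c*/c for a costlier valid plan, and 0 for a failure; ILR is the ratio of A*'s trace length to the model's trace length, counted only on solved or optimally solved tasks), so treat the exact normalization as an assumption. The `TaskResult` fields and the sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskResult:
    solved: bool          # generated plan is valid and reaches the goal
    plan_cost: int        # cost c of the generated plan (ignored if unsolved)
    optimal_cost: int     # optimal cost c* for the task, e.g. computed with A*
    model_trace_len: int  # tokens in the model's generated search trace
    astar_trace_len: int  # tokens in A*'s trace for the same task


def solved_rate(results: List[TaskResult]) -> float:
    return sum(r.solved for r in results) / len(results)


def optimal_rate(results: List[TaskResult]) -> float:
    return sum(r.solved and r.plan_cost == r.optimal_cost for r in results) / len(results)


def swc(results: List[TaskResult]) -> float:
    """Success weighted by cost, averaged over all tasks (failures contribute 0)."""
    total = sum(r.optimal_cost / max(r.plan_cost, r.optimal_cost)
                for r in results if r.solved)
    return total / len(results)


def ilr(results: List[TaskResult], require_optimal: bool = False) -> float:
    """Improved length ratio: A* trace length / model trace length, counted on solved
    (or, with require_optimal=True, optimally solved) tasks, averaged over all tasks."""
    total = sum(r.astar_trace_len / r.model_trace_len
                for r in results
                if r.solved and (not require_optimal or r.plan_cost == r.optimal_cost))
    return total / len(results)


results = [
    TaskResult(True, 10, 10, 80, 100),   # optimal plan, shorter trace than A*
    TaskResult(True, 12, 10, 50, 100),   # valid but suboptimal plan
    TaskResult(False, 0, 8, 200, 60),    # failed task
]
print(round(swc(results), 3))  # → 0.611
```

Under this definition, an ILR above 1 means the model reached its solutions with shorter search traces than A*.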

In conclusion, Searchformer is a significant step forward in AI planning and hints at a future in which AI systems handle complex decision-making tasks with greater efficiency and accuracy. By tackling planning challenges directly, the research team lays a foundation for more capable and efficient AI systems, deepens our understanding of how AI can solve complex problems, and sets the stage for future work in this field.

Check out the paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he explores new advances and looks for opportunities to contribute.