Meta, the parent company of Facebook and Instagram, is ramping up its strategy to counter disinformation ahead of the crucial EU elections. With the election just months away, Meta is rolling out new efforts to curb the spread of misinformation and manipulation on its platforms. These efforts include establishing an EU-specific election operations center, expanding its network of fact-checking partners, and developing tools to detect and label AI-generated content.

The stakes are high as June’s elections will shape the future of the European Union at a critical juncture. Voter manipulation tactics can sway the results, especially with the advent of new technologies like deepfakes that make disinformation more persuasive.

Meta has been under intense scrutiny over election interference since Russian trolls used the platform to sow discord in the U.S. presidential election in 2016. The company has since invested billions of dollars in safety and security measures and introduced transparency measures for political advertising.

But experts warn that Meta’s plan may not be enough to effectively combat disinformation. According to recent reports, the company failed to catch a coordinated influence campaign originating from China that targeted Americans ahead of the 2022 midterm elections.

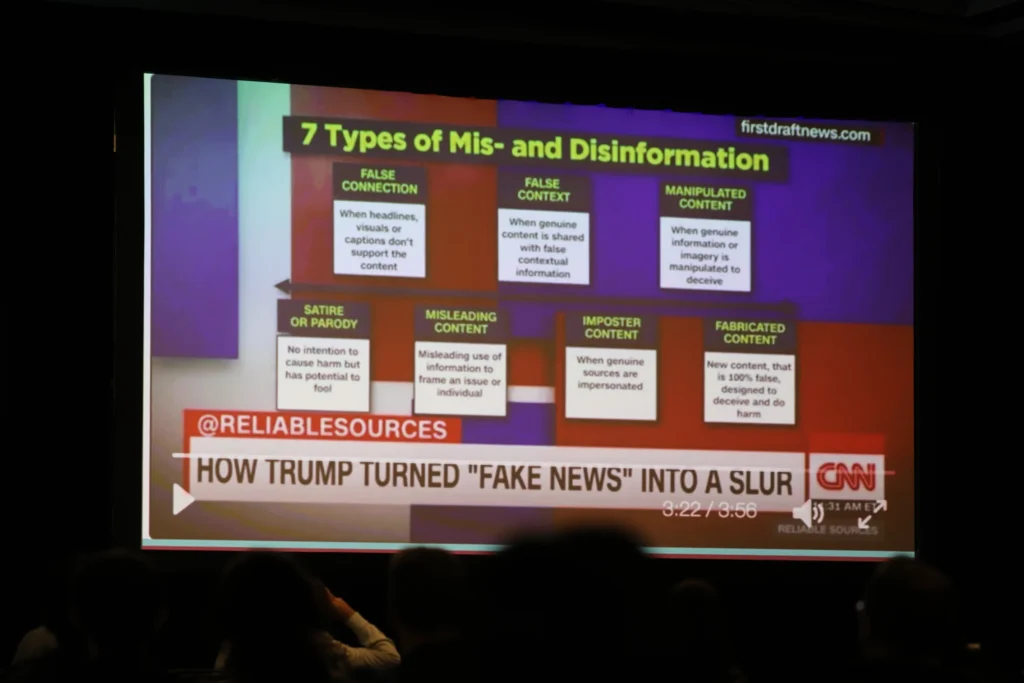

Meta has expanded its fact-checking network to cover all 24 official EU languages and requires disclosure of AI-generated content, but critics say these efforts are ineffective. No clear system has yet been established to reliably authenticate images and videos that appear to show violent conflicts between groups. Convincing fake footage produced with sophisticated editing software can be difficult to debunk.

Even Meta’s addition of three more fact-checking partners seems insufficient given the scale of the threat. The entire network of 29 organizations across Europe is likely to struggle to cope with the expected flood of misinformation about such an important vote.

Additionally, experts question how Meta’s planned transparency labels for AI-generated content can confidently identify manipulated media like deepfakes. Currently, there is no reliable technology that can detect AI-generated fakes with complete accuracy.

One of the major vulnerabilities that past influence operations have exploited is the use of authentic voices, such as politicians, journalists, and others with large followings, to amplify divisive narratives. As high-stakes elections approach in 80 countries this year, even small-scale disinformation efforts can have a broader impact if amplified by public figures and those in positions of authority.

Ben Nimmo, Global Threat Intelligence Lead at Meta, explains that covert influence campaigns penetrate authentic communities by drawing real people into their audience. This remains a significant vulnerability, as even just a few shares by a credible person can lend legitimacy to false narratives related to foreign interference.

With important EU elections just around the corner, Meta remains on high alert. But as deepfake technology evolves, fighting disinformation in the social media age will only become more complex. Meta’s plan represents an important step forward, but defending democracy will continue to be an uphill battle. Authentic voices with power and influence will continue to be prime targets for manipulation.

In conclusion, strengthening Meta’s counter-disinformation strategies before the EU elections is crucial to safeguarding democratic processes. However, experts have expressed concerns about the effectiveness of these measures, highlighting their limitations in detecting fake content and false narratives. As technology advances, disinformation campaigns also become more sophisticated, requiring platforms like Meta to continually adapt and improve their strategies. Combating disinformation in the age of social media remains an ongoing challenge, requiring constant vigilance and cooperation between platforms, fact-checkers, and governments to protect democracy and ensure fair elections.