
By Matt O’Brien / Associated Press, Cambridge, Massachusetts

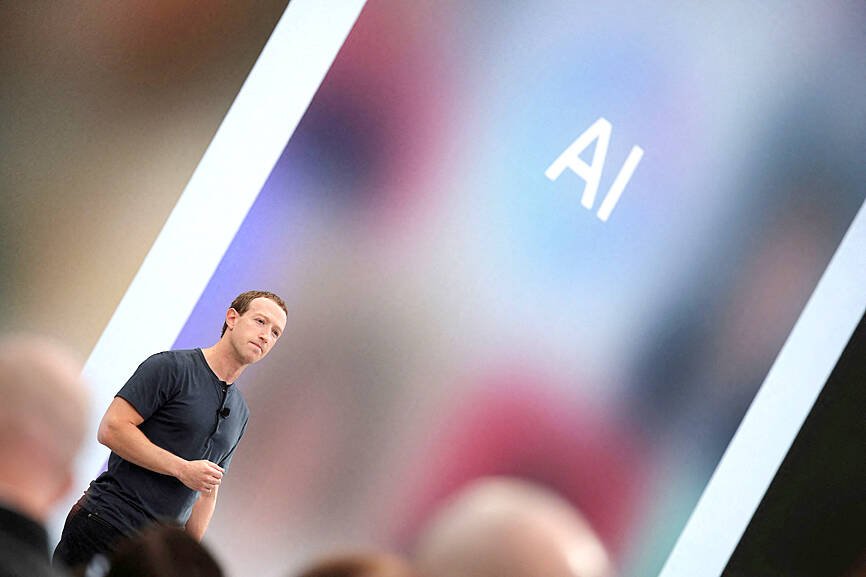

Facebook parent company Meta Platforms on Thursday unveiled a new set of artificial intelligence (AI) systems that power what CEO Mark Zuckerberg calls “the most intelligent AI assistant that you can freely use.”

But as Zuckerberg’s team of upgraded Meta AI agents began venturing into social media this week to engage with real people, their bizarre exchanges made one thing clear: even the best generative AI technology still has serious limitations.

One agent joined a Facebook mothers’ group to talk about its gifted child. Another tried to give away nonexistent items to confused members of a “Buy Nothing” forum.

Photo: Reuters

Meta, along with leading AI developers Google and OpenAI and startups such as Anthropic, Cohere and France’s Mistral, has been churning out new AI language models in the hope of persuading customers that it has the smartest, handiest or most efficient chatbots.

While Meta is saving the most powerful of its AI models, called Llama 3, for later, on Thursday it publicly released two smaller versions of the same Llama 3 system and said the technology is now built into the Meta AI assistant feature in Facebook, Instagram and WhatsApp.

AI language models are trained on vast pools of data that help them predict the most plausible next word in a sentence, and newer versions are typically smarter and more capable than their predecessors. Meta’s newest models were built with 8 billion and 70 billion parameters, the internal values a model adjusts during training and a common measure of its size. A bigger model of roughly 400 billion parameters is still in training.

“The vast majority of consumers candidly don’t know or care too much about the underlying base model, but the way they will experience it is just as a much more useful, fun and versatile AI assistant,” Nick Clegg, Meta’s president of global affairs, said in an interview.

Clegg said Meta’s AI agent is loosening up.

He said some people found the earlier Llama 2 model, released less than a year ago, to be “a little stiff and sanctimonious sometimes in not responding to what were often perfectly innocuous or innocent prompts and questions.”

But in letting down their guard, Meta AI agents were also spotted this week posing as humans with made-up life experiences. An official Meta AI chatbot inserted itself into a conversation in a private Facebook group for Manhattan mothers, claiming that it, too, had a child in the New York City school district.

Confronted by group members, the chatbot later apologized before its comments disappeared, according to a series of screenshots shown to the Associated Press.

“Apologies for the mistake! I’m just a large language model, I don’t have experiences or children,” the chatbot told the group.

One group member who also happens to study AI said it was clear that the agent did not know how to tell a helpful response apart from one that would come across as insensitive, disrespectful or meaningless when generated by an AI rather than a human.

“An AI assistant that is not reliably helpful and can be actively harmful puts a lot of the burden on the individuals using it,” said Aleksandra Korolova, an assistant professor of computer science at Princeton University.

Clegg said Wednesday that he was unaware of the exchange.

Facebook’s online help page says the Meta AI agent will join a group conversation if invited, or if someone “asks a question in a post and no one responds within an hour.”

Group admins can turn this off.

In another example shown to the Associated Press on Thursday, the agent caused confusion in a forum for swapping unwanted items near Boston. Exactly one hour after a Facebook user posted about looking for certain items, an AI agent offered a “gently used” Canon camera and an “almost-new portable air conditioning unit that I never ended up using.”

“This is new technology and it may not always return the response we intend, which is the same for all generative AI systems,” Meta said in a written statement Thursday.

The company said it is continually working on improving features.

In the year after ChatGPT sparked a frenzy for AI technology that generates human-like text, images, code and sound, the tech industry and academia introduced some 149 large AI systems trained on massive datasets, more than double the number from the year before, according to a Stanford University survey.

Nestor Maslej, a research manager at Stanford University’s Institute for Human-Centered Artificial Intelligence, said there may eventually be a limit, at least when it comes to data.

“I think it’s been clear that if you scale the models on more data, they can become increasingly better,” he said. “But at the same time, these systems are already trained on percentages of all the data that has ever existed on the internet.”

More data, acquired and ingested at costs only tech giants can afford and increasingly subject to copyright disputes and lawsuits, will continue to drive improvements.

“Yet they still cannot plan well,” Maslej said. “They still hallucinate. They’re still making mistakes in their reasoning.”

Getting to AI systems that can perform higher-level cognitive tasks and commonsense reasoning, areas where humans still excel, might require a shift beyond building ever-bigger models.

For the flood of businesses trying to adopt generative AI, which model they choose depends on several factors, including cost. Language models in particular have been used to power customer service chatbots, write reports and financial insights, and summarize long documents.

“You’re seeing companies kind of looking at fit, testing each of the different models for what they’re trying to do and finding some that are better at some areas rather than others,” said Todd Lohr, a leader in technology consulting at KPMG.

Unlike other model developers that sell their AI services to other businesses, Meta is largely designing its AI products for consumers, namely the people using its advertising-fueled social networks.

Joelle Pineau, Meta’s vice president of AI research, said at an event in London last week that the company’s long-term goal is to make Meta AI, powered by Llama, “the most useful assistant in the world.”

“In many ways, the model we have now will be child’s play compared to the model that will be here in five years,” she said.

But she said the “question on the table” is whether researchers have been able to fine-tune the larger Llama 3 model so that it is safe to use and does not, for example, hallucinate or engage in hate speech.

In contrast to the largely proprietary systems of Google and OpenAI, Meta has traditionally advocated a more open approach, making key components of its AI system publicly available for others to use.

“It’s not just a technical question,” Pineau said. “It is a social question. What is the behavior that we want out of these models? How do we shape that? And if we keep on growing our models ever more general and powerful without properly socializing them, we are going to have a big problem on our hands.”

Comments are moderated. Please keep your comments relevant to the article. Speech containing abusive or obscene language, personal attacks of any kind, or advertising will be removed and the user will be banned. The final decision is at the discretion of Taipei Times.