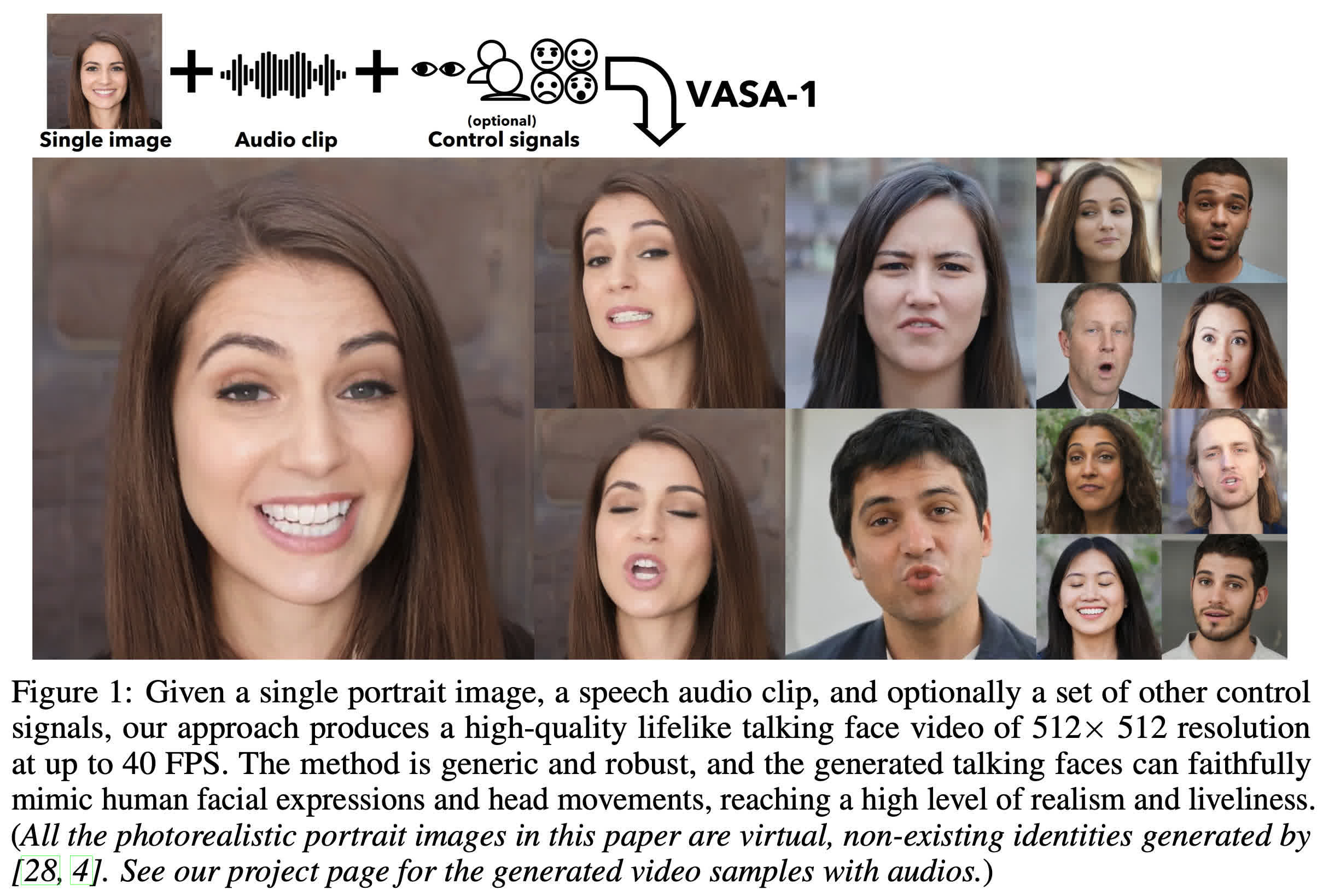

Through the looking glass: Microsoft Research Asia has released a white paper on a generative AI application it is developing. The program is called VASA-1, and it can create highly realistic videos from just a single image of a face and a voice audio track. Even more impressive, the software can generate the video and swap faces in real time.

Visual Affective Skills Animator (VASA) is a machine learning framework that analyzes a photo of a face and animates it to match a voice, synchronizing lip and mouth movements to the audio. It also simulates facial expressions, head movements, and even unseen body movements.

Like all generative AI, it's not perfect. Machines still have trouble with fine details like fingers and, in the case of VASA, teeth. If you pay close attention to the avatars' teeth, you'll notice that they change in size and shape, giving them an accordion-like quality. The effect is relatively subtle and appears to fluctuate with the amount of movement in the animation.

There are also a few mannerisms that don't look quite right. It's hard to put into words; it's more that your brain registers something slightly off about the speaker. However, it is only noticeable if you look closely. To a casual observer, the face could pass for a recording of a real person speaking.

The faces used in the researchers' demos were also AI-generated, using StyleGAN2 or DALL-E-3. However, the system works with any image, real or generated. It can even animate painted or drawn faces. The Mona Lisa's face singing Anne Hathaway's performance of "Paparazzi" on Conan O'Brien's show is hilarious.

All kidding aside, there are legitimate concerns that malicious actors could use this technology to spread propaganda or try to trick people by impersonating family members. Given that many social media users post family photos on their accounts, it would be easy for someone to scrape images and imitate that family member. It could also be combined with voice cloning technology to make it even more convincing.

Microsoft's research team acknowledges the potential for abuse but offers no adequate way to counter it other than careful video analysis. It points to the aforementioned artifacts while ignoring the fact that ongoing research will keep improving the system. The team's only concrete step to prevent abuse is to withhold the technology from public release.

The researchers said they “do not plan to release any online demos, APIs, products, additional implementation details, or related products until we are certain that the technology is used responsibly and in accordance with appropriate regulations.”

That said, the technology does have some interesting practical applications. One is to use VASA to create realistic video avatars that render locally in real time, eliminating the need for a bandwidth-hungry video feed. Apple already does something similar with the Spatial Personas available on the Vision Pro.

You can find the technical details in the white paper published on arXiv. More demos are available on Microsoft's website.