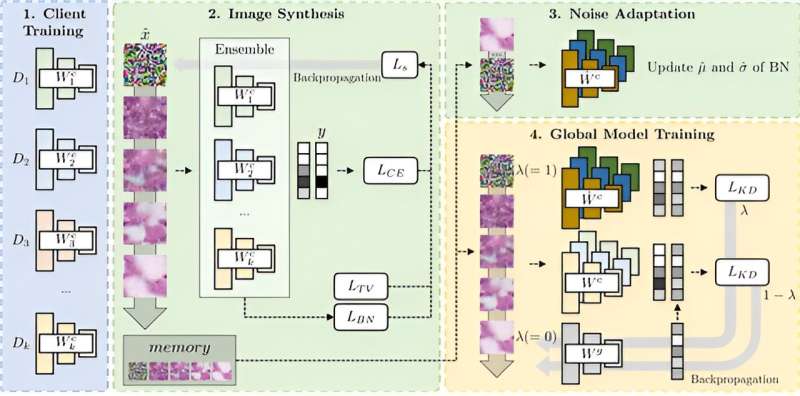

Model structure devised by the research team. Credit: DGIST

A research team led by Professor Sang-hyun Park of the Department of Robotics, Mechanical Engineering, and Artificial Intelligence at the Daegu Gyeongbuk Institute of Science and Technology (DGIST) has developed a federated learning AI technology in collaboration with a research team from Stanford University in the United States. The technology enables large-scale model training without sharing personal information or data.

Because it allows a model that multiple institutions can use jointly to be trained efficiently, the technology is expected to contribute greatly to medical image analysis.

When training deep learning models in medical settings, the data includes patients’ personal information, so there are strong concerns about privacy violations. This makes it difficult to collect data from individual hospitals onto a central server in order to develop large-scale models that can be used jointly by multiple hospitals.

Federated learning addresses this problem: instead of collecting data on a central server, it collects only the models trained at each hospital or institution and sends them to the central server, where they are combined. However, this approach requires the models to be sent to the central server over and over again. For hospitals that must store patient data securely, repeatedly transferring models to a central server can be costly and time-consuming. The number of model transfers should therefore be kept to a minimum.
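The communication pattern described above can be illustrated with a minimal sketch of generic federated averaging. This is not the research team's method; the linear model, the synthetic data, and the names `local_update` and `federated_averaging` are assumptions made purely for illustration. The point is that raw data never leaves a client, while the number of model uploads grows with the number of rounds.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Run a few gradient-descent steps of least-squares regression on one client's private data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

def federated_averaging(client_data, rounds, dim):
    """Each round: send the global weights out, train locally, average the results on the server."""
    global_w = np.zeros(dim)
    transfers = 0  # counts client-to-server model uploads
    for _ in range(rounds):
        updates, sizes = [], []
        for X, y in client_data:
            updates.append(local_update(global_w, X, y))  # only weights are shared
            sizes.append(len(y))
            transfers += 1
        # Weight each client's model by its number of samples.
        global_w = np.average(updates, axis=0, weights=sizes)
    return global_w, transfers

# Hypothetical stand-in for three hospitals holding private data.
rng = np.random.default_rng(0)
true_w = np.array([1.0, -2.0, 0.5])
client_data = []
for n in (40, 60, 100):
    X = rng.normal(size=(n, 3))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    client_data.append((X, y))

w, transfers = federated_averaging(client_data, rounds=20, dim=3)
print("uploads:", transfers)
```

With three clients and 20 rounds, the sketch performs 60 uploads. A one-shot scheme would aim to bring this down to a single upload per client.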

Professor Park’s research team has developed a method that minimizes the number of model transfers while maintaining or improving model performance through image generation and knowledge distillation. The method uses generated images together with the models received from the institutions, so that the model can be trained on the central server.
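As a rough illustration of knowledge distillation in this setting, the sketch below computes a standard temperature-softened distillation loss in which the "teacher" is the average prediction of several client models. It is a generic formulation, not the loss used in the paper. The random logits stand in for model outputs on generated images, and the function names and the temperature value are assumptions.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Row-wise softmax with temperature scaling (numerically stabilized)."""
    z = logits / temperature
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def distillation_loss(student_logits, client_logits, temperature=2.0):
    """Mean KL(teacher || student), with the teacher being the averaged client predictions."""
    teacher = np.mean([softmax(l, temperature) for l in client_logits], axis=0)
    student = softmax(student_logits, temperature)
    eps = 1e-12  # avoids log(0)
    kl = np.sum(teacher * (np.log(teacher + eps) - np.log(student + eps)), axis=1)
    return float(kl.mean())

# Hypothetical outputs of three client models on 8 generated images, 4 classes.
rng = np.random.default_rng(0)
client_logits = [rng.normal(size=(8, 4)) for _ in range(3)]

# An untrained student disagrees with the ensemble.
loss_random = distillation_loss(rng.normal(size=(8, 4)), client_logits)

# A student whose softened predictions equal the ensemble average has near-zero loss.
teacher = np.mean([softmax(l, 2.0) for l in client_logits], axis=0)
loss_matched = distillation_loss(2.0 * np.log(teacher), client_logits)
print(loss_random, loss_matched)
```

Minimizing such a loss on the server needs only the uploaded client models and a set of images to evaluate them on, which is why it pairs naturally with image generation when real patient data cannot be shared.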

The research team used this technology to perform classification tasks on microscopy, dermoscopy, optical coherence tomography (OCT), pathology, X-ray, and fundus images. The results confirmed that the technique achieves better classification performance than existing federated learning techniques.

Professor Park from DGIST’s Department of Robotics and Mechanical Engineering said, “This research will allow models to be trained universally at all institutions participating in the learning without sharing personal information or data. It is expected to significantly reduce the cost of developing large-scale AI models across a variety of medical settings.”

Notably, this study was conducted in collaboration with a research team from Stanford University in the United States. In parallel, Professor Park’s research team carried out a separate study with Stanford University on generating realistic brain MRI images. In that work, the team developed a conditional diffusion model that generates 3D brain MRI images from a 2D brain MRI slice given as input.

This model is expected to be useful in the medical field because it can generate high-quality images with less memory than conventional brain MRI generation models.

The results were published at Medical Image Computing and Computer-Assisted Intervention (MICCAI).

For more information:

Myeongkyun Kang et al., One-Shot Federated Learning on Medical Data Using Knowledge Distillation with Image Synthesis and Client Model Adaptation, Medical Image Computing and Computer Assisted Intervention, MICCAI 2023 (2023). DOI: 10.1007/978-3-031-43895-0_49

Provided by DGIST

Citation: Researchers develop AI technology for image recognition in the medical field (November 6, 2023). Retrieved April 12, 2024 from https://medicalxpress.com/news/2023-11-ai-technology-image-recognition-medical.html

This document is subject to copyright. No part may be reproduced without written permission, except in fair dealing for personal study or research purposes. Content is provided for informational purposes only.