Over the years, Google seems to have established a pattern in how it interacts with the web. The search engine provides structured data formats and tools that let us supply information to Google. Think meta tags, schema markup, the disavow tool, and more.

Google then consumes and learns from this structured data spread across the web. Once enough learning has been extracted, Google will retire or de-emphasize these structured data formats, making them less influential or obsolete.

This circular process of providing structured data capabilities, consuming information, learning from it, and then removing or reducing those capabilities appears to be a core part of Google’s strategy.

It gives SEOs and brands a temporary advantage in search while serving as a means for Google to extract data, improve its algorithms, and continually deepen its understanding of the web.

This article explains this “give and take” pattern through several examples.

Google’s “give and take” pattern

The pattern can be divided into four stages.

- Structure: Google provides a structured way to interact with search snippets or its ranking algorithms. For example, meta keywords were once used to tell Google which keywords were relevant to a particular web page.

- Consume: Google collects data from the web by crawling websites. This step is important: Google can’t learn anything without consuming data from the web.

- Learn: Google then uses the fresh crawl data gathered after its recommended structure has been implemented. How did webmasters react to the tools and code snippets Google proposed? Were the changes useful, or were they exploited? Google can now change its ranking algorithms with confidence.

- Retire: Once Google has learned what it can, there’s no reason to rely on us to feed it structured information. The search engine needs to learn to manage without these incoming data pipes, since leaving them open will inevitably lead to abuse over time. Google has deprecated the proposed structure in many, but not all, instances.

The trick is for the search engine to learn from webmasters’ interactions with its proposed structure before webmasters learn how to manipulate that structure. Google usually wins this race.

That doesn’t mean no one can take advantage of a new structural item before Google discards it. It simply means that Google typically retires such items before the abuse becomes widespread.

Let’s look at some examples.

1. Metadata

In the past, meta keywords and meta descriptions played an important role within Google’s ranking algorithm. The first support for meta keywords within search engines actually predates Google’s founding in 1998.

The meta keywords tag was introduced as a way for a web page to tell search engines which terms should lead searchers to it. However, such straightforward and useful code quickly became exploitable.
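To make the mechanism concrete, here is a minimal sketch of how a crawler could read the keywords a page declares about itself. It uses only Python’s standard `html.parser` module. The `MetaTagParser` class, the `read_meta_keywords` helper, and the sample page are hypothetical names made up for illustration; this is not how Google’s crawler is implemented.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects the content of <meta name="..."> tags from an HTML document."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # attrs is a list of (name, value) pairs; value may be None.
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        name = attr_map.get("name", "").lower()
        if name:
            self.meta[name] = attr_map.get("content", "")


def read_meta_keywords(html):
    """Return the keywords declared in the page's meta keywords tag."""
    parser = MetaTagParser()
    parser.feed(html)
    raw = parser.meta.get("keywords", "")
    return [kw.strip() for kw in raw.split(",") if kw.strip()]


# Hypothetical page, used only for illustration.
SAMPLE_PAGE = """
<html><head>
<title>Red running shoes</title>
<meta name="keywords" content="running shoes, red shoes, trainers">
<meta name="description" content="Lightweight red running shoes for road racing.">
</head><body></body></html>
"""

keywords = read_meta_keywords(SAMPLE_PAGE)
print(keywords)
```

Nothing in this sketch checks whether the declared keywords match the page’s real content, which is exactly why the tag was so easy to stuff.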

Many webmasters inserted thousands of keywords per page to get more search traffic than their fair share. This quickly led to the rise of low-quality websites filled with ads that unfairly converted the traffic they acquired into advertising revenue.

In 2009, Google confirmed what many had long suspected. Google said:

“The answer, at least as of now (September 2009) in Google’s web search results, is no. Google does not use keyword meta tags for web search rankings.”

Another example is meta descriptions, which are snippets of code that Google supported from the beginning. The meta description was used as a snippet of text below the link in Google search results.

As Google has improved, it has started ignoring meta descriptions in certain situations. This is because users may discover a webpage through many different search keywords.

If a web page describes multiple topics and a user searches for a term related to topic 3, displaying a snippet that includes a description of topic 1 or 2 won’t help.

As a result, Google started rewriting search snippets based on a user’s search intent, sometimes ignoring a page’s static meta description.
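The reasoning can be shown with a toy sketch. It assumes a page is split into passages and picks whichever text shares the most words with the query, falling back to the static meta description on ties. The `choose_snippet` function and its word-overlap scoring are my own simplification for illustration, not Google’s actual snippet logic.

```python
def choose_snippet(query, meta_description, passages):
    """Pick the text with the most query-term overlap.

    The static meta description wins ties, so it is only replaced when
    a passage from the page body matches the query strictly better.
    """
    terms = set(query.lower().split())

    def overlap(text):
        # Crude relevance score: number of query words found in the text.
        return len(terms & set(text.lower().split()))

    best = meta_description
    best_score = overlap(meta_description)
    for passage in passages:
        score = overlap(passage)
        if score > best_score:
            best, best_score = passage, score
    return best


# Hypothetical page covering more than one topic.
META = "A guide to growing tomatoes at home"
PASSAGES = [
    "Tomatoes need six hours of sun each day",
    "Pruning roses in late winter encourages new growth",
]

on_topic = choose_snippet("growing tomatoes", META, PASSAGES)
off_topic = choose_snippet("pruning roses", META, PASSAGES)
```

Here `on_topic` keeps the meta description, while `off_topic` switches to the passage about roses because the description says nothing relevant to that query.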

Recently, Google has shortened its search snippets and even confirmed that it primarily examines the main content of a page when generating descriptive snippets.

2. Schemas and structured data

Google introduced support for schema (a form of structured data) in 2009.

Initially, it promoted the “microformats” style of schema, which required individual elements to be marked up within the HTML to provide structured and contextual information to Google.

Conceptually, this is actually not that far removed from the idea behind HTML meta tags. Surprisingly, a new coding syntax was adopted rather than simply using meta tags more extensively.

For example, the original idea behind schema markup was (and largely remains) to provide additional contextual information about data or code that has already been deployed. This is similar to the definition of metadata:

- “Information to help you understand or use other information”

Schema and metadata both try to achieve this same goal: they are information that describes other, existing information so that users can make better use of it. However, the detail and structural hierarchy of schema (eventually) made it much more extensible and efficient.

Today, Google still uses schemas for context awareness and details about various web entities: web pages, organizations, reviews, videos, products, and the list goes on.

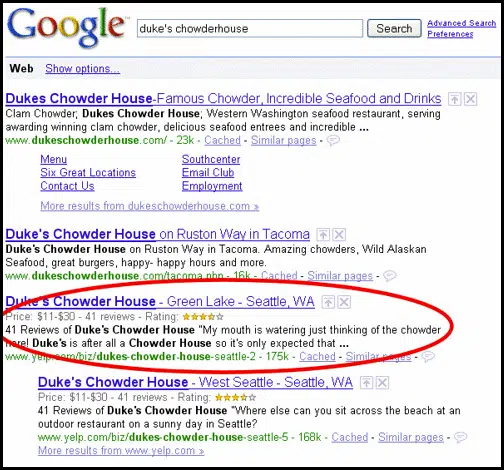

However, Google originally provided a high degree of control over the visuals of a page’s search listing through schema. You could easily add star ratings to your pages’ Google search results, helping them stand out (visually) against competing web results.

As usual, some companies started abusing these privileges to gain an advantage over less SEO-aware competitors.

In February 2014, Google started talking about penalties for rich snippet spam. This was a time when people abused schema to make their search results look better than others, even when the information behind them was wrong. For example, a site with no reviews might claim an aggregate review rating of 5 stars (clearly false).

Fast forward to 2024, and schema is still useful in some situations, but it’s not as powerful as it once was. Delivery is easier thanks to Google’s preference for JSON-LD. However, schema no longer has absolute power to control the visuals of a search listing.
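As a rough illustration of JSON-LD delivery, the sketch below builds schema.org `Product` markup with Python’s standard `json` module and only includes an `aggregateRating` when at least one review exists, which avoids the false five-star claim described above. The function names, product name, and numbers are hypothetical; the `@context`, `@type`, `ratingValue`, and `reviewCount` keys come from the schema.org vocabulary.

```python
import json


def product_json_ld(name, rating_value=None, review_count=0):
    """Build schema.org Product markup, adding a rating only when reviews exist."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
    }
    # Only claim an aggregate rating that is backed by at least one review.
    if rating_value is not None and review_count > 0:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        }
    return data


def to_script_tag(data):
    """Wrap the markup in the script element used to embed JSON-LD in a page."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical products, used only for illustration.
reviewed = product_json_ld("Example Widget", rating_value=4.4, review_count=89)
unreviewed = product_json_ld("Example Widget", rating_value=5, review_count=0)

print(to_script_tag(reviewed))
```

Because the whole description lives in one script element, it can be added to a page without touching individual HTML elements, which is what makes JSON-LD easier to deliver than the older inline markup styles.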


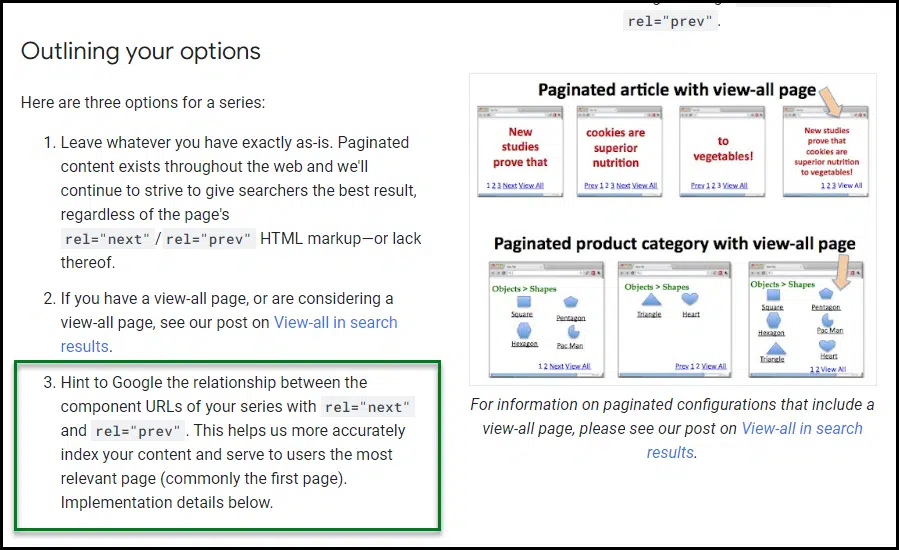

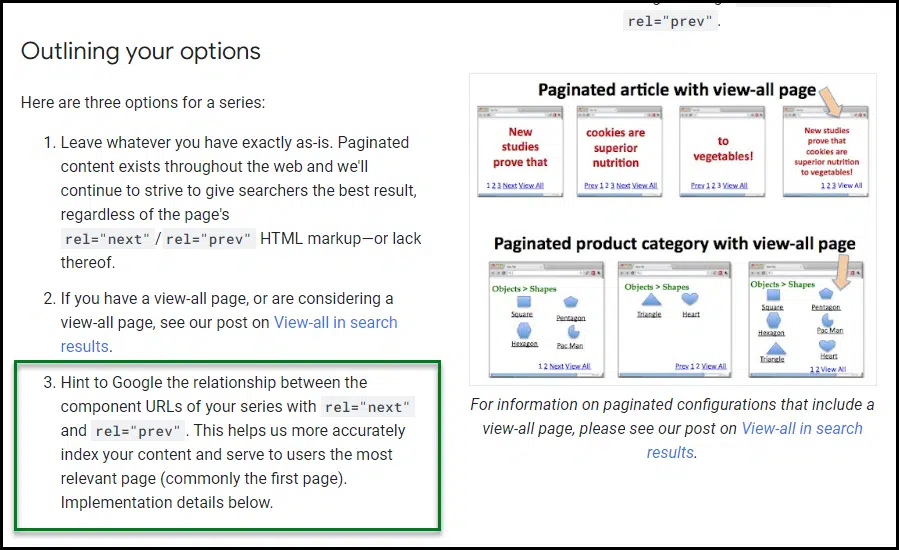

3. Rel=Prev/Next

Rel=”prev” and rel=”next” are two HTML attributes proposed by Google in 2011. The idea was to help Google develop a more contextual awareness of how the URLs in a paginated series relate to each other.
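For readers who never implemented it, here is a minimal sketch of the markup each page in a series needed. The `pagination_link_tags` helper and the `?page=N` URL scheme are assumptions made for illustration; real sites used whatever pagination URLs their platform produced.

```python
def pagination_link_tags(base_url, page, total_pages):
    """Return the rel="prev"/rel="next" link tags for one page of a series."""
    if not 1 <= page <= total_pages:
        raise ValueError("page must be between 1 and total_pages")

    def page_url(n):
        # Assumed URL scheme: the first page has no query string.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        # Every page except the first points back to its predecessor.
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:
        # Every page except the last points forward to its successor.
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags


# Hypothetical three-page blog archive.
for number in range(1, 4):
    print(number, pagination_link_tags("https://example.com/blog", number, 3))
```

Each page needs a different pair of tags in its head, which hints at why the change usually meant editing theme templates rather than pasting in one static snippet.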

Eight years later, Google announced that it was ending support. It also said that it hadn’t supported this type of markup for a while, suggesting that support ended around 2016, just five years after it was first proposed.

It was no wonder that many people were frustrated, as the tags were cumbersome to implement and often required actual web developers to recode parts of a website’s theme.

Increasingly, it seems like Google will suggest complex code changes one minute and then ignore them the next. In reality, Google may have simply learned everything it needed to from the rel=prev / next experiment.

4. Disavow Tool

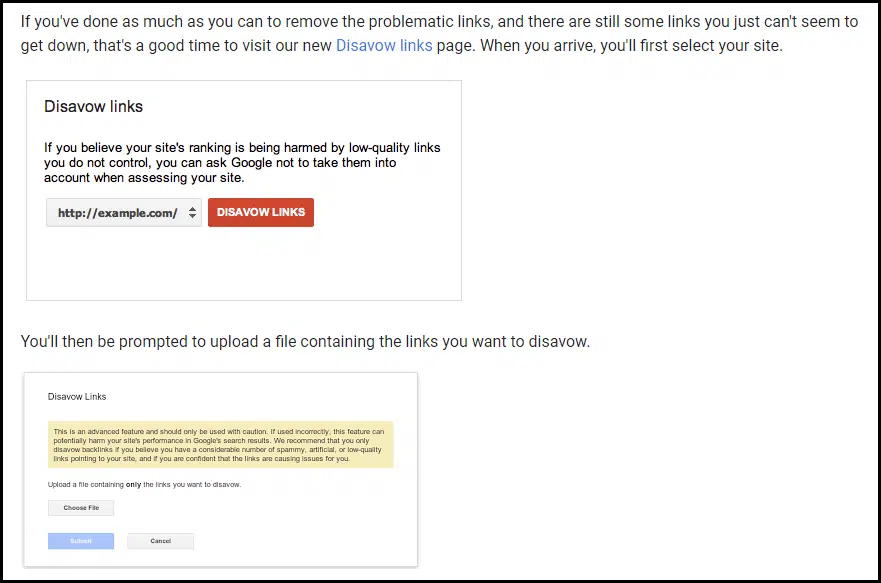

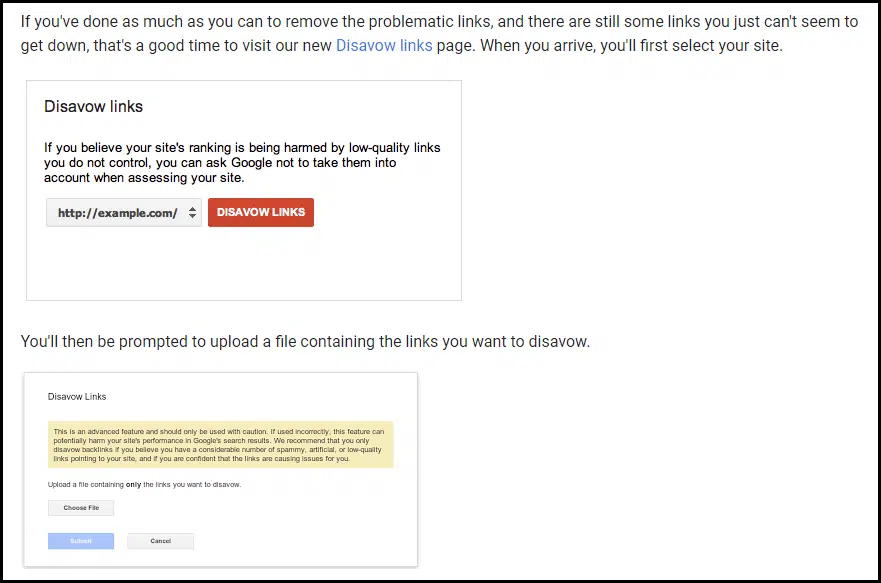

In October 2012, news of Google’s new link disavowal tool hit the web.

Earlier that year, in April 2012, Google had released the Penguin update, throwing the web into chaos. The update targeted spammy off-site activity (link building), and many websites saw manual action notices appear within Search Console (then known as Webmaster Tools).

The disavow tool allowed you to upload a list of linking pages or domains that you wanted excluded from Google’s ranking algorithm. If the uploaded links closely matched Google’s own internal assessment of your backlink profile, any active manual penalty might be lifted.
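The uploaded list is a plain text file with one entry per line: either a full URL, or a whole domain written with a `domain:` prefix, with `#` marking comment lines. The sketch below assembles such a file. The `build_disavow_file` helper and the example domains are hypothetical and only meant to show the shape of the file.

```python
def build_disavow_file(domains=(), urls=(), note=None):
    """Assemble the text of a disavow file from domains and individual URLs."""
    lines = []
    if note:
        # Lines starting with "#" are comments.
        lines.append(f"# {note}")
    for domain in sorted(set(domains)):
        # The "domain:" prefix covers every link from that domain.
        lines.append(f"domain:{domain}")
    for url in sorted(set(urls)):
        # A bare URL covers only links from that single page.
        lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical entries, used only for illustration.
disavow_text = build_disavow_file(
    domains=["spam.example", "links.example"],
    urls=["http://bad.example/page.html"],
    note="Paid links, removal requested",
)
print(disavow_text)
```

Deduplicating and sorting the entries is just a convenience for reviewing the file by hand before uploading it.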

Because this left a sizeable part of the backlink profile “disavowed”, post-penalty traffic was typically lower than pre-penalty traffic.

As a result, the SEO community held the tool in relatively low regard. Still, a complete backlink removal or disavow project was usually necessary. Less traffic after the penalty was better than no traffic at all.

Disavow projects haven’t been necessary for years. Google currently states that those still offering this service are using outdated practices.

In recent years, Google’s John Mueller has been highly critical of companies that sell “disavowal” and “toxic link” work. It seems Google no longer wants us to use this tool. Certainly, it doesn’t advise using it (and hasn’t for years).

Dig deeper: Toxic Links and Disavowals: A Comprehensive SEO Guide

Unraveling the give-and-take relationship between Google and the web

Google provides tools and code snippets that allow SEOs to easily manipulate search results. Once Google gains insight from these introductions, such features are often phased out. Google grants us a limited amount of temporary control to facilitate long-term learning and adaptation.

Does this make Google’s small, temporary releases useless? There are two ways to look at it.

- Some people may say, “Don’t jump on the bandwagon! These temporary developments are not worth the effort they require.”

- Others say, “Google gives us a momentary opportunity for control, so we should take advantage of it before it’s gone.”

The truth is, there are no right or wrong answers. It all depends on your ability to adapt effectively to changes in the web.

If you’re used to quick changes, implement what you can and react quickly. It’s not worth following trends blindly if your organization lacks the expertise or resources to change quickly.

I don’t think this ebb and flow of give and take necessarily makes Google evil. Each company leverages its unique assets to drive further learning and commercial activities.

In this case, we are one of Google’s assets. It’s up to you whether you want to continue this relationship (between you and Google).

You could choose not to cooperate with Google’s trade of temporary power for long-term learning. However, this may place you at a competitive disadvantage.

The opinions expressed in this article are those of the guest author and not necessarily those of Search Engine Land. Staff authors are listed here.