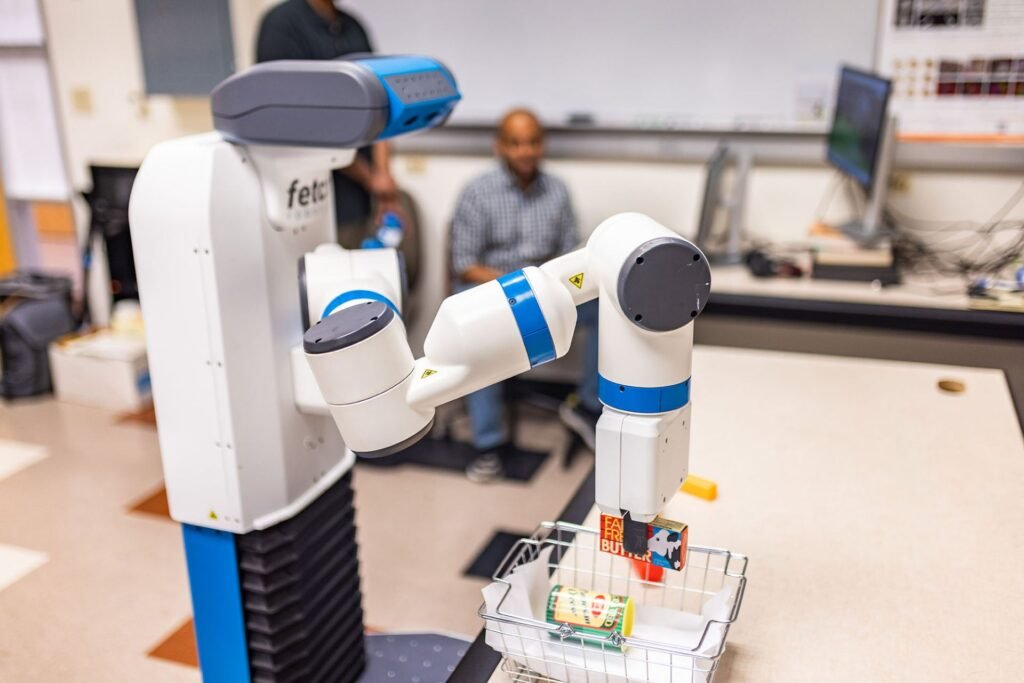

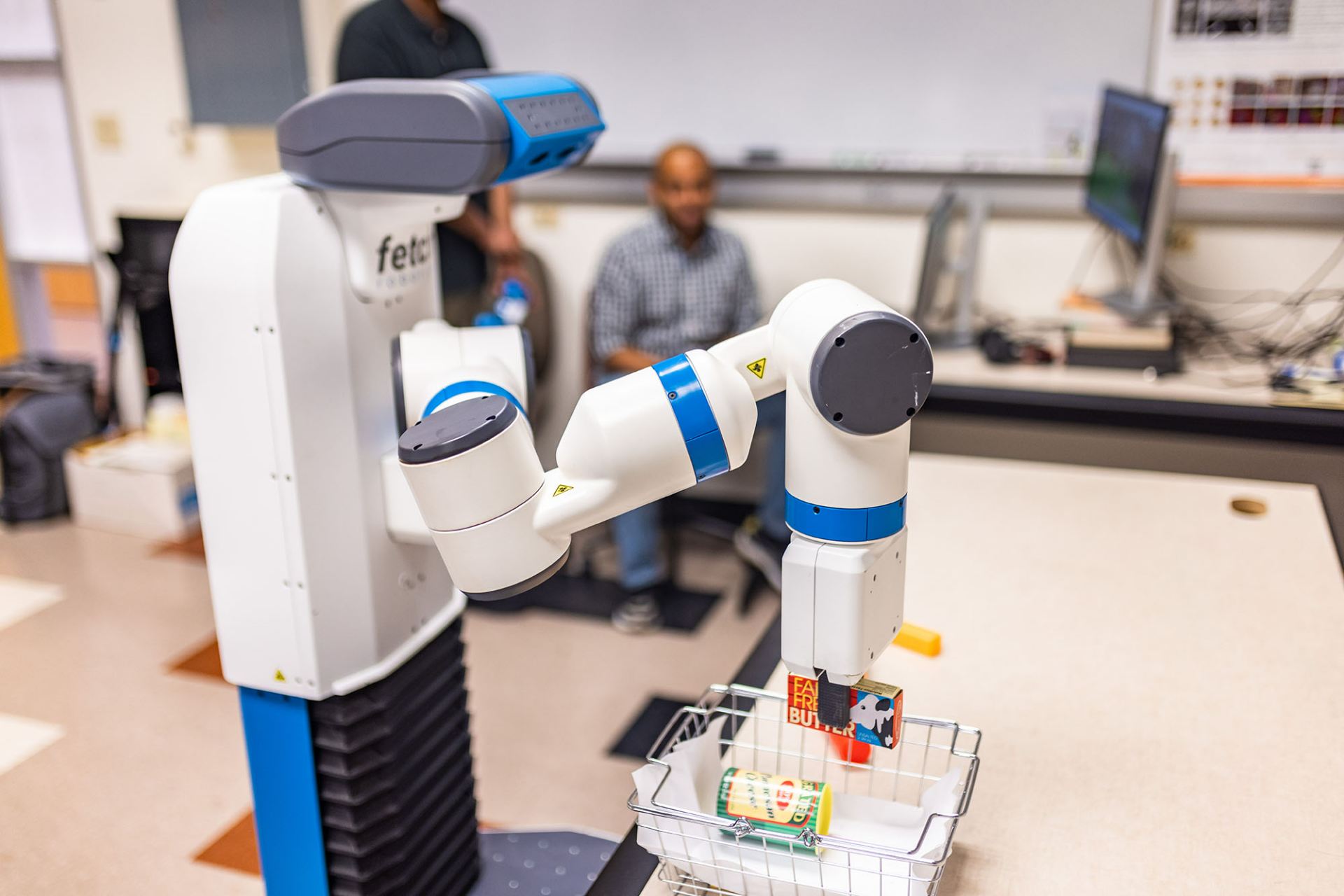

A robot moves a toy package of butter around a table in the Intelligent Robotics and Vision Lab at the University of Texas at Dallas. With each push, the robot learns to recognize objects through a new system developed by a team of UT Dallas computer scientists.

The new system allows the robot to push objects multiple times until a sequence of images is collected. This in turn enables the system to segment all the objects in the sequence until the robot recognizes them. Previous approaches relied on a single push or grasp by the robot to “learn” the object.

The team presented its research paper at the Robotics: Science and Systems conference, held July 10 to 14 in Daegu, South Korea. Papers for the conference are selected for their novelty, technical quality, significance, potential impact, and clarity.

Ninad Khargonkar, a doctoral student in computer science, worked on an algorithm to help Ramp make decisions.

Robots that can cook dinner, clear the kitchen table, and empty the dishwasher are still a long way off. But the research group has made a significant advance with its robotic system, which uses artificial intelligence to help robots better identify and remember objects, said Dr. Yu Xiang, senior author of the paper.

“If you ask a robot to pick up a mug or bring you a bottle of water, the robot needs to recognize those objects,” said Xiang, assistant professor of computer science in the Erik Jonsson School of Engineering and Computer Science.

The UTD researchers' technology is designed to help robots detect a wide variety of objects in environments such as homes, and to generalize, or identify, similar versions of common items, such as water bottles of different brands, shapes, and sizes.

Inside Xiang's lab are storage boxes filled with toy packages of common foods such as spaghetti, ketchup, and carrots, which are used to train the lab's robot, named Ramp. Ramp is a Fetch Robotics mobile manipulator robot that stands about 4 feet tall on a round mobile platform. Ramp has a long mechanical arm with seven joints. At the end is a square “hand” with two fingers for grasping objects.

Xiang said robots learn to recognize objects in much the same way that children learn by interacting with toys.

“After pushing the object, the robot learns to recognize it,” Xiang said. “With that data, we train an AI model so that the next time the robot sees the object, it doesn't need to push it again. By the second time it sees the object, it will just pick it up.”

What's new about the researchers' method is that the robot pushes each item 15 to 20 times, whereas previous interactive perception methods used only a single push. Xiang said multiple pushes let the robot take more photos with its RGB-D camera, which includes a depth sensor, and learn about each item in more detail. This reduces the potential for mistakes.


The task of recognizing, differentiating, and remembering objects is called segmentation, and it is one of the key capabilities that robots need to complete their tasks.

“To our knowledge, this is the first system to leverage long-term robot interaction for object segmentation,” Xiang said.

Computer science doctoral student Ninad Khargonkar said working on the project helped him improve the algorithms that help the robot make decisions.

“It's one thing to develop an algorithm and test it on an abstract dataset; it's another thing to test it on real-world tasks,” Khargonkar said. “Seeing that real-world performance was a key learning experience.”

The researchers' next step is to improve other functions, such as planning and control, which could enable tasks such as sorting recycled materials.

Other UTD authors of the paper include computer science graduate student Yangxiao Lu; computer science seniors Zesheng Xu and Charles Averill; Kamalesh Palanisamy MS'23; Dr. Yunhui Guo, assistant professor of computer science; and Dr. Nicholas Ruozzi, associate professor of computer science. Dr. Kaiyu Hang of Rice University also participated.

This research was supported in part by the Defense Advanced Research Projects Agency as part of its Perceptually-enabled Task Guidance program, which develops AI technologies that help users perform complex physical tasks by providing task guidance through augmented reality, expanding users' skill sets and reducing errors.

From left: Computer science doctoral students Sai Haneesh Allu and Ninad Khargonkar with Dr. Yu Xiang, assistant professor of computer science, and Ramp, a robot they are training to recognize and manipulate common objects.