At its annual Worldwide Developers Conference (WWDC), Apple unveiled Apple Intelligence, a self-branded “personal intelligence system” that will be deeply integrated into its platforms.

What is Apple Intelligence?

By combining powerful generative models with personal context, Apple Intelligence aims to deliver useful, relevant information right where it’s needed.

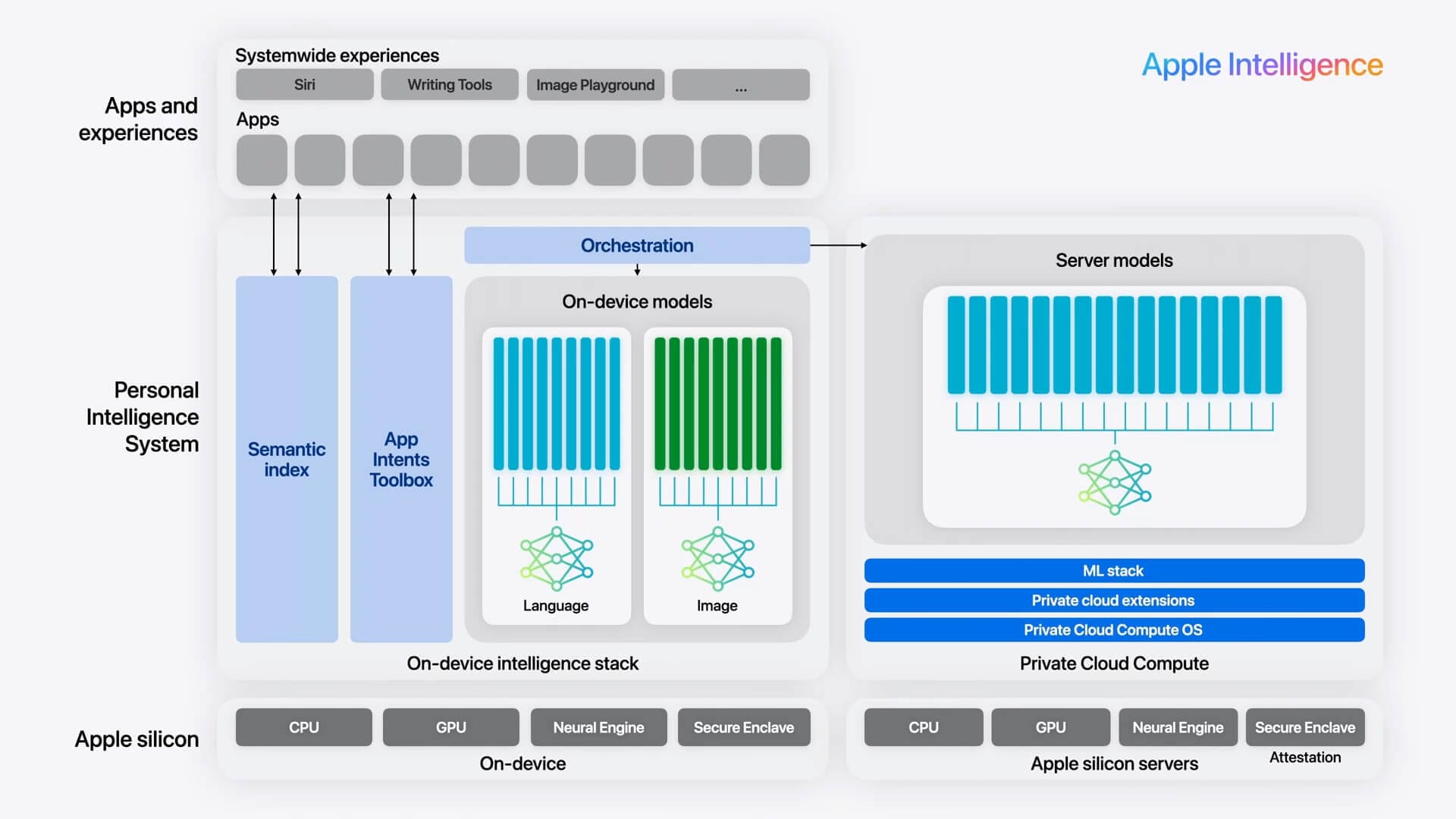

Apple Intelligence is built on a family of generative models created by Apple, including on-device and server-based models, diffusion models for image generation, and coding models. It can also hand off more complex requests to third-party models such as ChatGPT.

Here’s a quick overview of what we know so far about the two underlying models and how Apple Intelligence works.

On-device model

- Size: Approximately 3 billion parameters

- Vocabulary size: 49K

- Optimization: Uses low-bit quantization and grouped query attention for speed and efficiency.

- Performance: On an iPhone 15 Pro, Apple reports a time-to-first-token latency of about 0.6 ms per prompt token and a generation rate of 30 tokens per second.
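To make the on-device figures concrete, here is a rough back-of-the-envelope estimate built only from the numbers listed above (about 3 billion parameters, 0.6 ms per prompt token to the first token, 30 tokens per second). The prompt and reply lengths and the bits-per-weight values are illustrative assumptions, not Apple figures, and the latency model is a simplification that treats prompt processing and generation as two separate, additive phases.

```python
# Back-of-the-envelope estimates from the reported on-device figures.
# Prompt/reply lengths and bits-per-weight values are illustrative assumptions.

TTFT_MS_PER_PROMPT_TOKEN = 0.6   # reported time-to-first-token cost per prompt token
TOKENS_PER_SECOND = 30.0         # reported generation rate
NUM_PARAMS = 3_000_000_000       # "approximately 3 billion" parameters


def estimate_latency_s(prompt_tokens: int, output_tokens: int) -> float:
    """Rough total seconds: process the prompt, then generate the reply."""
    time_to_first_token = prompt_tokens * TTFT_MS_PER_PROMPT_TOKEN / 1000.0
    generation_time = output_tokens / TOKENS_PER_SECOND
    return time_to_first_token + generation_time


def weight_memory_gb(bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return NUM_PARAMS * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # A hypothetical 1,000-token prompt with a 150-token reply:
    # 0.6 s to the first token plus 5.0 s of generation.
    print(f"{estimate_latency_s(1000, 150):.2f} s")  # prints 5.60 s
    for bits in (16, 4, 3.5):
        print(f"{bits:>4} bits/weight -> {weight_memory_gb(bits):.2f} GB")
```

The memory loop shows why low-bit quantization matters on a phone: at 16 bits per weight, 3 billion parameters would need about 6 GB for weights alone, while 4 bits brings that down to about 1.5 GB.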

Server-based model

- Vocabulary size: 100K

- Function: Runs on Private Cloud Compute to handle more complex tasks while preserving privacy and security.

- Optimization: Uses techniques such as speculative decoding and context pruning to improve performance.

- Security: Runs on a hardened subset of the iOS foundations, with strong encryption and a secure boot process protecting user data.
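Apple has not published how its server model implements speculative decoding, so the sketch below shows the generic greedy variant of the technique, with two toy next-token functions (`toy_target` and `toy_draft`, both hypothetical) standing in for real models. A cheap draft model proposes `k` tokens, the larger target model keeps the longest prefix it agrees with, and the final output matches plain greedy decoding with the target model alone.

```python
from typing import Callable, List

# A "model" maps a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Reference: plain greedy decoding, one model call per new token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding; output equals greedy_decode(target, ...)."""
    seq = list(prompt)
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target model checks each proposed position in order.
        #    (A real system scores all k positions in one forward pass.)
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if tok != expected:
                accepted.append(expected)  # first mismatch: take target's token, stop
                break
            accepted.append(tok)
        else:
            # Every draft token matched, so the target adds one bonus token.
            accepted.append(target(seq + accepted))
        seq.extend(accepted)
    # The last round may overshoot max_new; trim to the requested length.
    return seq[: len(prompt) + max_new]


# Toy stand-ins (hypothetical): the draft agrees with the target except after token 3.
def toy_target(seq: List[int]) -> int:
    return (seq[-1] * 3 + 1) % 10


def toy_draft(seq: List[int]) -> int:
    return 5 if seq[-1] == 3 else toy_target(seq)


if __name__ == "__main__":
    fast = speculative_decode(toy_target, toy_draft, [1], max_new=12)
    print(fast == greedy_decode(toy_target, [1], max_new=12))  # True
```

This sequential sketch only demonstrates correctness. The speedup in practice comes from the target model verifying all `k` draft tokens in a single batched pass, so each expensive call can yield several tokens whenever the draft guesses well.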

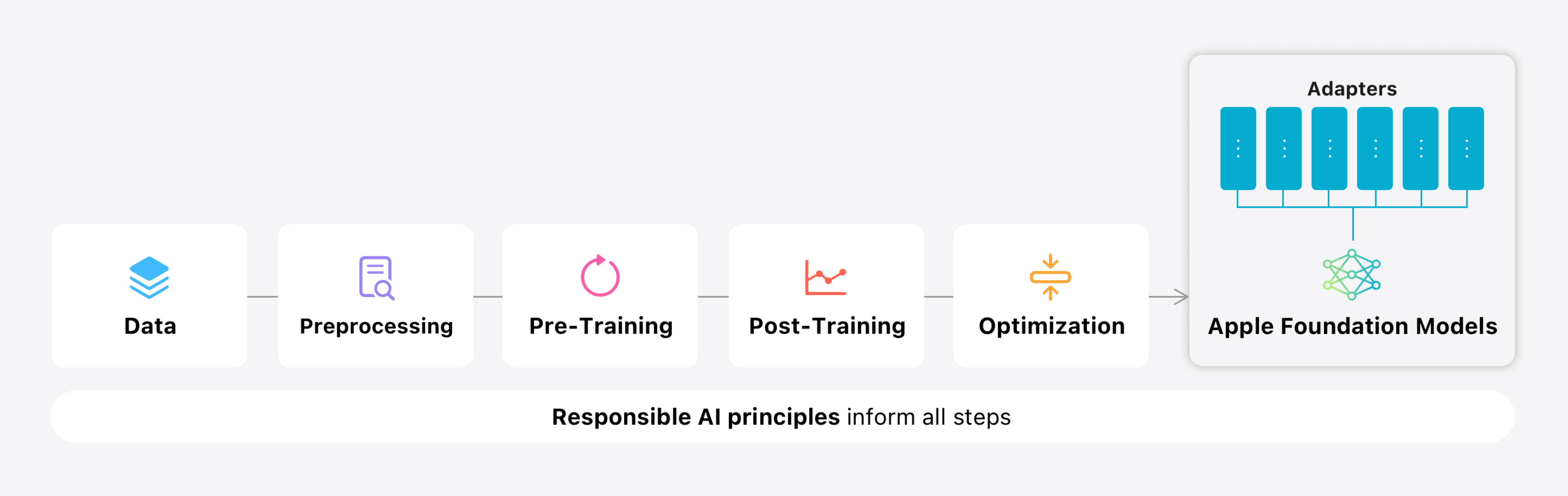

Apple’s models are trained on carefully curated datasets, and the company emphasizes that it does not use users’ private personal data or interactions to train them. The training data is a mix of licensed data, public data collected by AppleBot (Apple’s web crawler), and synthetic data. After pre-training, Apple applies new post-training algorithms, including rejection sampling fine-tuning and reinforcement learning from human feedback (RLHF), to improve the models’ ability to follow instructions.
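The details of Apple's post-training algorithms are beyond this overview, but the basic idea behind rejection sampling fine-tuning can be sketched generically: sample several candidate responses per prompt, score them with a reward function, keep the best one, and discard prompts whose best candidate still scores too low. The policy (`toy_generate`) and reward (`toy_reward`) below are hypothetical placeholders, and the final supervised fine-tuning step on the kept pairs is omitted.

```python
import random
from typing import Callable, List, Tuple

Generate = Callable[[str, random.Random], str]   # (prompt, rng) -> candidate response
Reward = Callable[[str, str], float]             # (prompt, response) -> score


def rejection_sample(prompts: List[str], generate: Generate, reward: Reward,
                     n_samples: int = 4, threshold: float = 0.5,
                     seed: int = 0) -> List[Tuple[str, str]]:
    """Build a fine-tuning set: sample n candidates per prompt, keep the
    highest-scoring one, and reject prompts whose best score is too low."""
    rng = random.Random(seed)
    dataset: List[Tuple[str, str]] = []
    for prompt in prompts:
        candidates = [generate(prompt, rng) for _ in range(n_samples)]
        best = max(candidates, key=lambda c: reward(prompt, c))
        if reward(prompt, best) >= threshold:
            dataset.append((prompt, best))
    return dataset


# Hypothetical toy policy and reward: the "correct" answer is the upper-cased prompt.
def toy_generate(prompt: str, rng: random.Random) -> str:
    return rng.choice([prompt.upper(), prompt.lower(), prompt[::-1]])


def toy_reward(prompt: str, response: str) -> float:
    return 1.0 if response == prompt.upper() else 0.0


if __name__ == "__main__":
    data = rejection_sample(["Summarize this", "Reply politely"], toy_generate, toy_reward)
    # The kept pairs would then be used for ordinary supervised fine-tuning.
    for prompt, response in data:
        print(prompt, "->", response)
```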

But the real magic happens at the optimization stage. By combining grouped query attention, shared embedding tables, low-bit palettization, and efficient key-value cache updates, Apple was able to create a highly compressed model that maintains quality while meeting the memory, power, and performance constraints of mobile devices.
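Palettization compresses weights by replacing each one with an index into a small lookup table (the "palette"). With `b`-bit indices, a layer of `N` weights needs about `N * b` bits plus a table of `2**b` values. Apple has not published its implementation; the sketch below uses a common formulation, 1-D k-means over a single weight list, with randomly generated weights standing in for a real layer.

```python
from typing import List, Tuple


def _nearest(w: float, palette: List[float]) -> int:
    """Index of the palette entry closest to weight w."""
    return min(range(len(palette)), key=lambda j: abs(w - palette[j]))


def palettize(weights: List[float], bits: int, iters: int = 20) -> Tuple[List[float], List[int]]:
    """Cluster weights into a 2**bits-entry lookup table with 1-D k-means.
    Returns (palette, indices): each weight is stored as a `bits`-bit index."""
    if bits < 1:
        raise ValueError("bits must be >= 1")
    k = 2 ** bits
    lo, hi = min(weights), max(weights)
    # Start with centroids spread evenly across the weight range.
    palette = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        indices = [_nearest(w, palette) for w in weights]
        for j in range(k):
            members = [w for w, idx in zip(weights, indices) if idx == j]
            if members:  # leave empty clusters where they are
                palette[j] = sum(members) / len(members)
    return palette, [_nearest(w, palette) for w in weights]


def depalettize(palette: List[float], indices: List[int]) -> List[float]:
    """Reconstruct approximate weights by table lookup."""
    return [palette[i] for i in indices]


if __name__ == "__main__":
    import random
    rng = random.Random(0)
    weights = [rng.gauss(0.0, 0.02) for _ in range(1000)]  # stand-in layer weights
    for bits in (2, 4):
        palette, idx = palettize(weights, bits)
        approx = depalettize(palette, idx)
        err = sum(abs(a - b) for a, b in zip(weights, approx)) / len(weights)
        print(f"{bits}-bit palette ({len(palette)} entries): mean abs error {err:.5f}")
```

At inference time only the indices and the tiny palette need to live in memory, which is where the savings come from; more bits per index buy a finer palette at the cost of storage.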

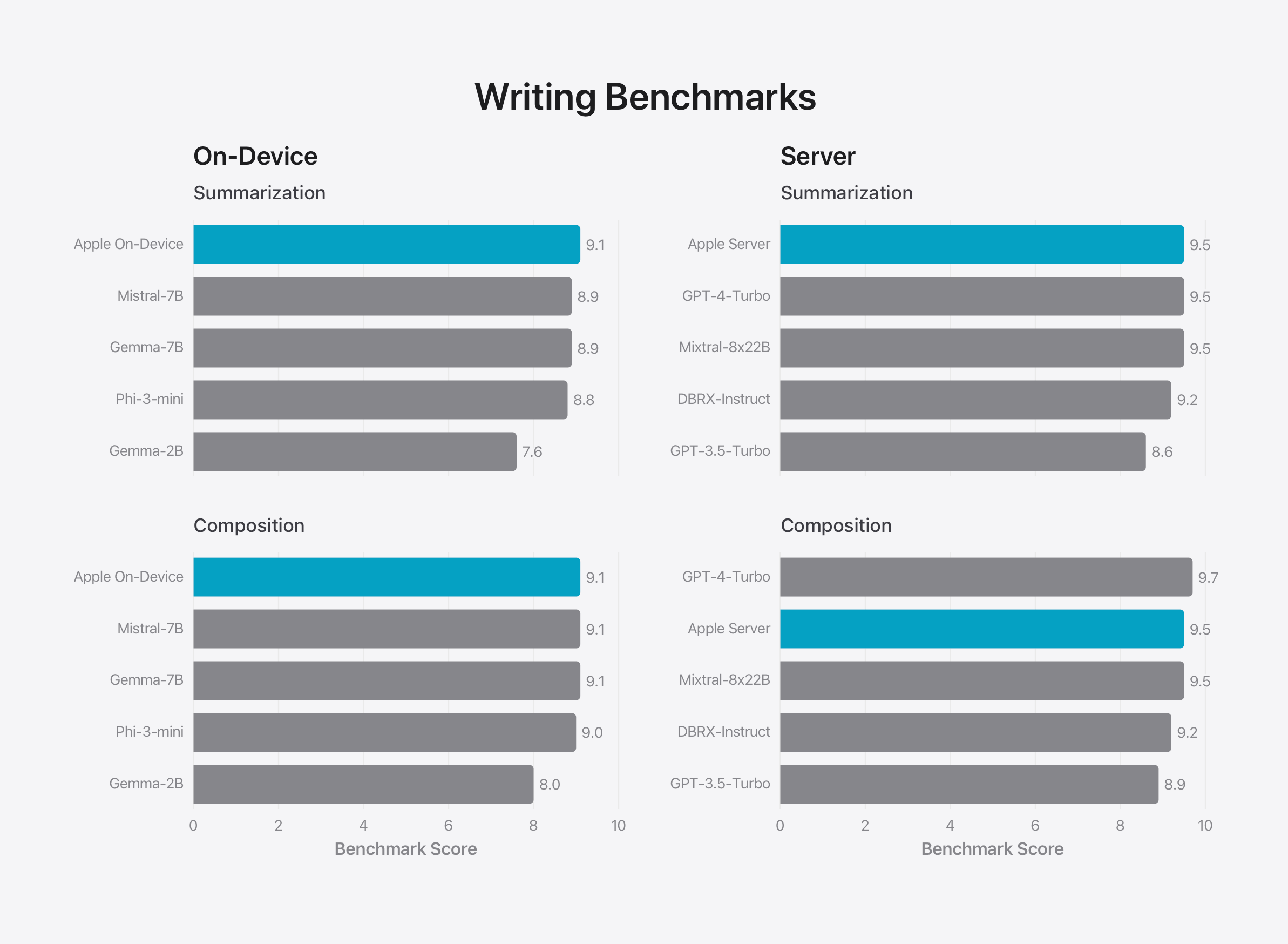

Unlike general-purpose models such as Google’s Gemini Nano or Microsoft’s Phi, Apple’s model is fine-tuned for the everyday tasks users perform on their devices, such as summarizing, replying to emails, and proofreading. It does this with adapters based on Low-Rank Adaptation (LoRA): small neural network modules plugged into various layers of a pre-trained model, which let the model adapt to a specific task while retaining its general knowledge. Crucially, these adapters can be loaded and swapped dynamically, so the same base model can become task-specific on the fly.
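The LoRA mechanism can be illustrated on a single linear layer. The base weight matrix `W` stays frozen and shared; each task contributes only two small matrices, `A` (rank `r` by input size) and `B` (output size by `r`), so an adapter costs `r * (d_in + d_out)` parameters instead of `d_in * d_out`. The class name `LoRALinear` and the adapter name `"summarize"` are hypothetical, and pure-Python lists stand in for real tensors; this is a sketch of the technique, not Apple's code.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class LoRALinear:
    """A frozen linear layer W plus swappable low-rank adapters.

    With an adapter (A: r x d_in, B: d_out x r) active, the layer computes
    y = W x + (alpha / r) * B (A x); only A and B are trained per task."""

    def __init__(self, weight: Matrix, alpha: float = 1.0) -> None:
        self.weight = weight            # shared base weights, never modified
        self.alpha = alpha
        self.adapters: Dict[str, Tuple[Matrix, Matrix]] = {}
        self.active: Optional[str] = None

    def load_adapter(self, name: str, a: Matrix, b: Matrix) -> None:
        self.adapters[name] = (a, b)

    def set_adapter(self, name: Optional[str]) -> None:
        # Swapping tasks only changes which small (A, B) pair is used.
        if name is not None and name not in self.adapters:
            raise KeyError(name)
        self.active = name

    def __call__(self, x: Vector) -> Vector:
        y = matvec(self.weight, x)
        if self.active is None:
            return y                    # plain base model
        a, b = self.adapters[self.active]
        scale = self.alpha / len(a)     # len(a) is the rank r
        delta = matvec(b, matvec(a, x))
        return [yi + scale * di for yi, di in zip(y, delta)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]])
    # Hypothetical rank-1 adapter for a "summarize" task.
    layer.load_adapter("summarize", a=[[1.0, 0.0]], b=[[0.0], [1.0]])
    print(layer([2.0, 3.0]))            # [2.0, 3.0]
    layer.set_adapter("summarize")
    print(layer([2.0, 3.0]))            # [2.0, 5.0]
```

Because the base weights are never touched, switching from one feature to another amounts to pointing the layer at a different small adapter, which is what makes on-the-fly specialization cheap.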

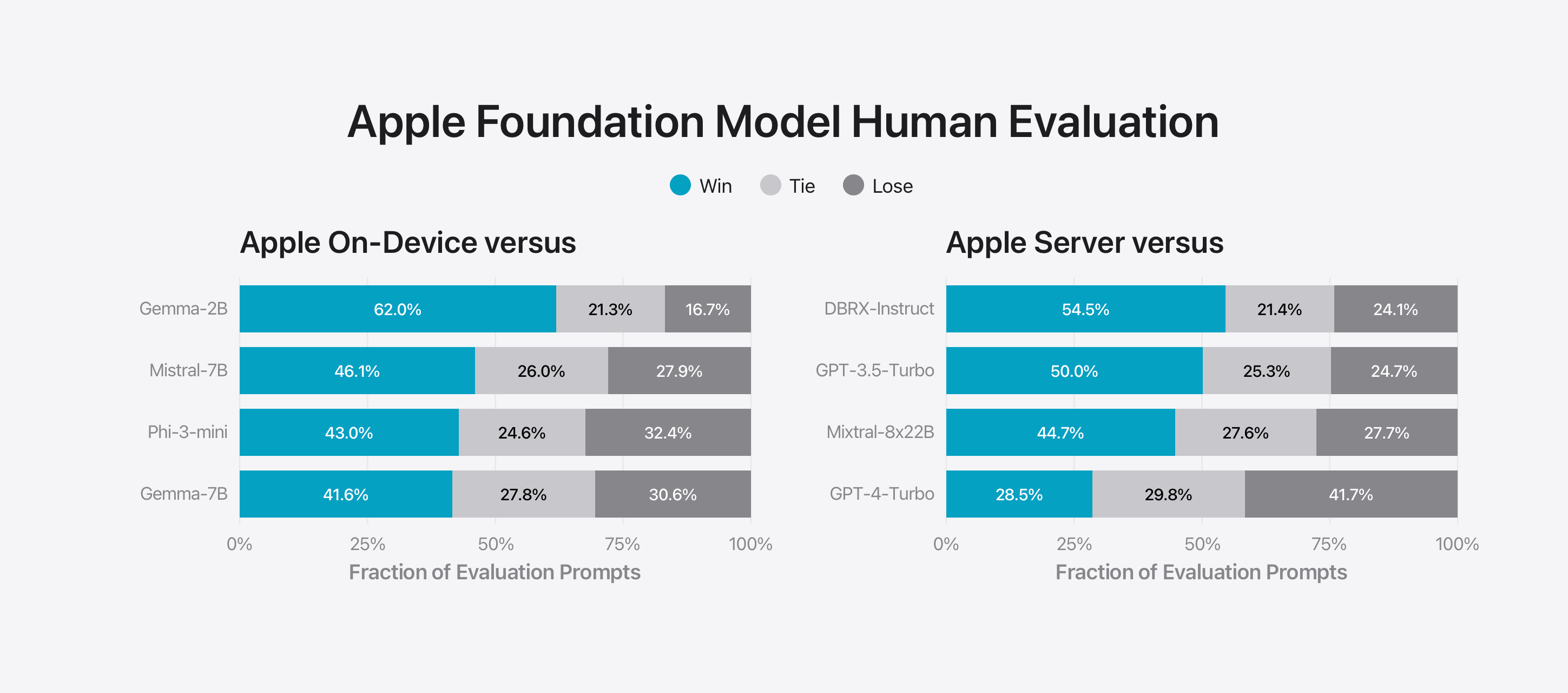

This makes Apple’s models hard to compare with others: the company publishes evaluation results for both the feature-specific adapters and the base models, but it is selective about how it benchmarks them.

Not surprisingly, Apple compared its models with open-source and commercial competitors and found that human evaluators preferred its models for safety and helpfulness. Apple says it prioritizes human evaluation because these results correlate highly with user experience.
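Preference results like these are commonly summarized as the share of side-by-side comparisons a model wins, ties, or loses. The sketch below assumes each human judgment has already been labeled `win`, `tie`, or `loss` from the perspective of the model being evaluated; the ratings are made up for illustration and are not Apple data.

```python
from collections import Counter
from typing import Dict, Iterable

LABELS = ("win", "tie", "loss")


def preference_rates(judgments: Iterable[str]) -> Dict[str, float]:
    """Share of side-by-side comparisons the model won, tied, or lost."""
    counts = Counter(judgments)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments")
    return {label: counts[label] / total for label in LABELS}


if __name__ == "__main__":
    # Hypothetical ratings from human graders comparing two models' replies.
    ratings = ["win", "win", "tie", "loss", "win", "tie", "win", "loss"]
    print(preference_rates(ratings))  # {'win': 0.5, 'tie': 0.25, 'loss': 0.25}
```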

Apple said its models demonstrated robust performance when faced with adversarial prompts, achieving lower violation rates on harmful content, sensitive topics, and factuality.

Overall, Apple’s on-device models are roughly on par with other small language models, while its server models are roughly GPT-3.5 class. For the limited use cases Apple Intelligence focuses on, this level of capability is probably more than sufficient. It also likely explains why, despite the impressive evaluation numbers it shared, Apple chose to partner with OpenAI to handle more complex requests.

Apple has put a lot of effort into ensuring that its AI models not only perform well but also run efficiently on mobile devices like the iPhone 15 Pro. While Apple’s approach to training and optimizing the underlying models is not revolutionary, its focus on efficiency, performance, and scalability appears to be paying off in powerful, personalized AI experiences.